Everything matters...also in machine learning: some pointers from ReproNim

Peer Herholz (he/him)

Research Assistant Professor - Northwestern University

Research affiliate - NeuroDataScience lab at MNI/McGill,

UNIQUE,

Max-Planck-Institute for Human Cognitive and Brain Sciences

Member - BIDS, ReproNim, Brainhack, Neuromod, OHBM SEA-SIG

@peerherholz

Let's imagine the following scenario:

Your PI tells you to run some machine learning analyses on your data (because buzzwords, and we all need top-tier publications and that sweet grant money). Specifically, you should use resting-state connectivity data to predict the age of participants (sounds familiar, eh?). So you go ahead, gather some data, apply a random forest (an ensemble of decision trees) and ...

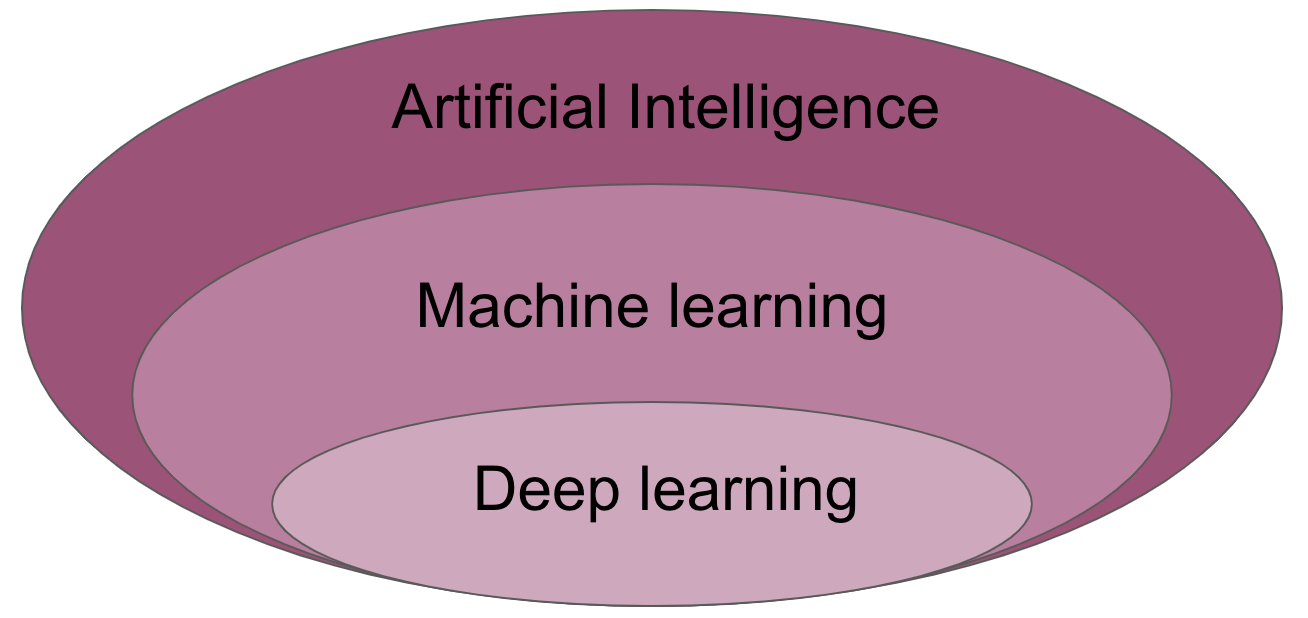

Whoa, let's go back a few steps and actually briefly define what we're talking about here, starting with machine learning.

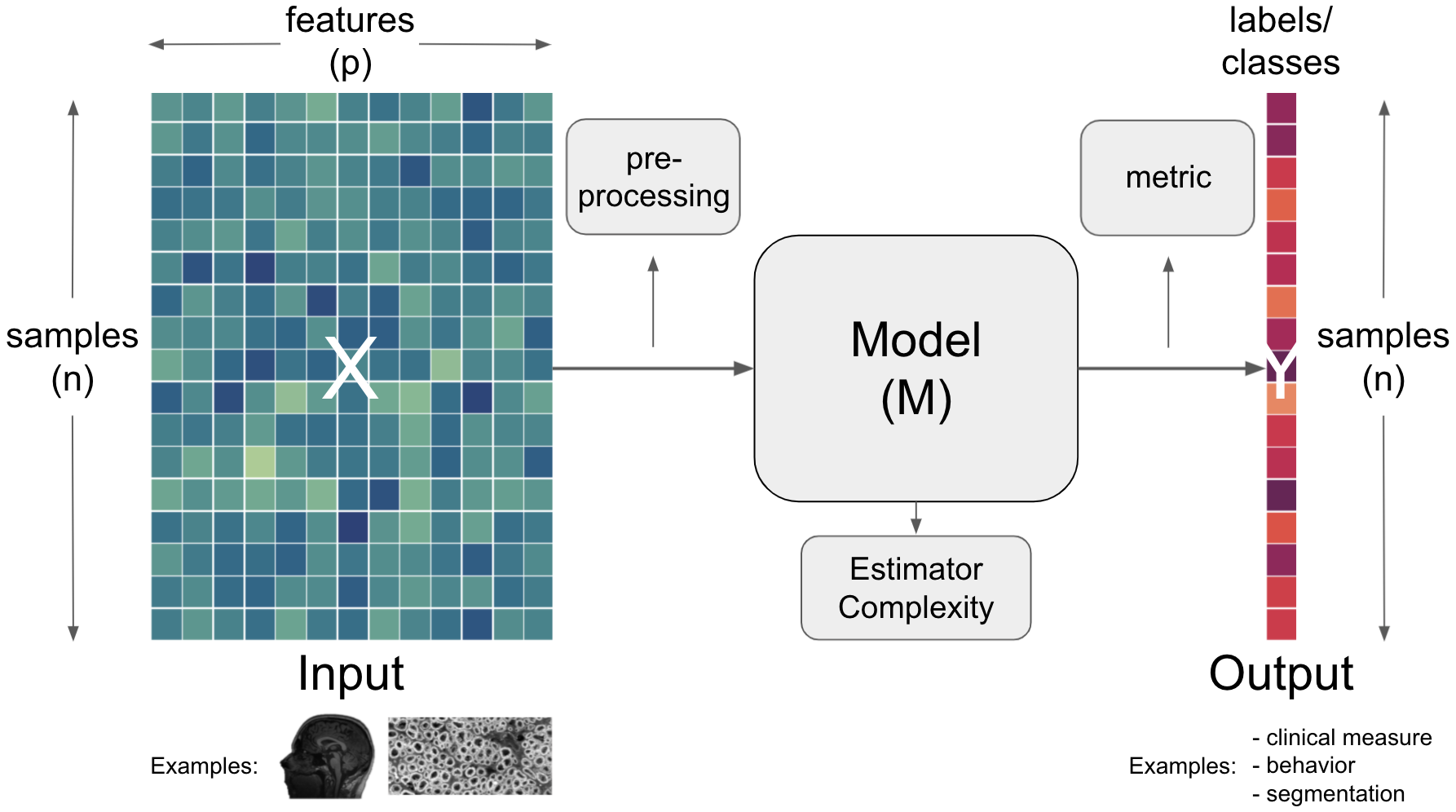

and also its components & steps:

Now, let's check what this actually looks like. First, we get the data:

import urllib.request

url = 'https://www.dropbox.com/s/v48f8pjfw4u2bxi/MAIN_BASC064_subsamp_features.npz?dl=1'

urllib.request.urlretrieve(url, 'MAIN2019_BASC064_subsamp_features.npz')

('MAIN2019_BASC064_subsamp_features.npz', <http.client.HTTPMessage at 0x10823ff10>)

and then inspect it:

import numpy as np

data = np.load('MAIN2019_BASC064_subsamp_features.npz')['a']

data.shape

(155, 2016)
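The second dimension is worth a quick sanity check: 2016 is exactly the number of unique region pairs you get from 64 regions (64 * 63 / 2), which fits the `BASC064` in the file name. Below is a minimal sketch of that arithmetic. It assumes each row is the vectorized lower triangle (diagonal excluded) of a symmetric 64 x 64 connectivity matrix; the ordering of the values is an assumption, `vector_to_matrix` is a hypothetical helper, and the data are random stand-ins rather than the real features.

```python
import numpy as np

n_regions = 64  # assumption: inferred from "BASC064" in the file name
n_connections = n_regions * (n_regions - 1) // 2
print(n_connections)  # 2016


def vector_to_matrix(vec, n):
    """Hypothetical helper: rebuild a symmetric n x n matrix from the
    n*(n-1)/2 values of its lower triangle (diagonal excluded)."""
    mat = np.zeros((n, n))
    rows, cols = np.tril_indices(n, k=-1)
    mat[rows, cols] = vec
    return mat + mat.T  # mirror the values into the upper triangle


# Random stand-in for one participant's feature vector.
rng = np.random.default_rng(0)
fake_features = rng.normal(size=n_connections)
fake_matrix = vector_to_matrix(fake_features, n_regions)
print(fake_matrix.shape)  # (64, 64)
```

If the real features were vectorized in a different order, the reconstructed matrix would be scrambled, so treat this only as a way to build intuition about what the 2016 columns could represent.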

We will also visualize it to better grasp what's going on:

import plotly.express as px

from IPython.display import display, HTML

from plotly.offline import init_notebook_mode, plot

fig = px.imshow(data, labels=dict(x="features (whole brain connectome connections)", y="participants"),

                height=800, aspect='auto')

fig.update(layout_coloraxis_showscale=False)

init_notebook_mode(connected=True)

fig.show()

#plot(fig, filename = 'input_data.html')

#display(HTML('input_data.html'))

Besides the input data, we also need our labels:

url = 'https://www.dropbox.com/s/ofsqdcukyde4lke/participants.csv?dl=1'

urllib.request.urlretrieve(url, 'participants.csv')

('participants.csv', <http.client.HTTPMessage at 0x117c13fd0>)

Which we then load and check as well:

import pandas as pd

labels = pd.read_csv('participants.csv')['AgeGroup']

labels.describe()

count        155
unique         6
top          5yo
freq          34
Name: AgeGroup, dtype: object
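So the labels are strings: 6 age groups, with `5yo` being the most frequent one (34 of 155 participants). Since the analysis further down converts them to integers via `pd.Categorical(labels).codes`, it may help to see how those codes get assigned. The sketch below uses made-up labels (only `5yo` is known to occur in the real data; the other group names are hypothetical) and shows that, without an explicit order, the categories are sorted as strings.

```python
import pandas as pd

# Made-up labels for illustration; only '5yo' is known to occur in the real data.
toy_labels = pd.Series(['Adult', '5yo', '10yo', '5yo', 'Adult', '10yo', '5yo'])

print(toy_labels.value_counts())

# Without an explicit order, the categories are sorted as strings.
default_cat = pd.Categorical(toy_labels)
print(list(default_cat.categories))  # ['10yo', '5yo', 'Adult']
print(default_cat.codes.tolist())    # [2, 1, 0, 1, 2, 0, 1]

# An explicit, ordered category list keeps the codes in age order.
ordered_cat = pd.Categorical(toy_labels, categories=['5yo', '10yo', 'Adult'], ordered=True)
print(ordered_cat.codes.tolist())    # [2, 0, 1, 0, 2, 1, 0]
```

This matters for any metric that treats the codes as numbers, such as a mean absolute error: the distance between two codes only reflects an age difference if the codes follow the age order.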

For a better intuition, we're also going to visualize the labels and their distribution:

fig = px.histogram(labels, marginal='box', template='plotly_white')

fig.update_layout(showlegend=False, width=800, height=600)

init_notebook_mode(connected=True)

fig.show()

#plot(fig, filename = 'labels.html')

#display(HTML('labels.html'))

And we're ready to create our machine learning analysis pipeline using scikit-learn, within which we will scale our input data, train a random forest and test its predictive performance. We import the required functions and classes:

from sklearn.preprocessing import StandardScaler

from sklearn.ensemble import RandomForestClassifier

from sklearn.pipeline import make_pipeline

from sklearn.model_selection import GroupShuffleSplit, cross_validate, cross_val_score

from sklearn.metrics import accuracy_score

and set up a scikit-learn pipeline:

pipe = make_pipeline(
    StandardScaler(),
    RandomForestClassifier()
)
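To make it a bit more tangible what such a pipeline object does, here is a small sketch on random stand-in data (the names `X_toy`, `y_toy` and `toy_pipe` are made up for this illustration, and the forest is kept tiny to run fast). It shows that `make_pipeline` names the steps after their classes and that fitting the pipeline fits the scaler only on the samples passed to `fit()`, which is what keeps test data out of the scaling step during cross-validation.

```python
import numpy as np
from sklearn.ensemble import RandomForestClassifier
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

# Small random stand-in data: 40 samples, 10 features, 2 classes.
rng = np.random.default_rng(0)
X_toy = rng.normal(loc=5.0, scale=2.0, size=(40, 10))
y_toy = np.tile([0, 1], 20)

toy_pipe = make_pipeline(StandardScaler(), RandomForestClassifier(n_estimators=10))
print(list(toy_pipe.named_steps))  # ['standardscaler', 'randomforestclassifier']

# Fitting the pipeline fits the scaler on the data passed to fit() only ...
toy_pipe.fit(X_toy[:30], y_toy[:30])
scaler = toy_pipe.named_steps['standardscaler']
print(np.allclose(scaler.mean_, X_toy[:30].mean(axis=0)))  # True

# ... and predict() reuses those training statistics for the unseen samples.
print(toy_pipe.predict(X_toy[30:]).shape)  # (10,)
```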

That's all we need to run the analysis with 10-fold cross-validation, computing accuracy and mean absolute error (MAE):

acc_val_rf = cross_validate(pipe, data, pd.Categorical(labels).codes, cv=10, return_estimator=True)

acc_rf = cross_val_score(pipe, data, pd.Categorical(labels).codes, cv=10)

mae_rf = cross_val_score(pipe, data, pd.Categorical(labels).codes, cv=10,
                         scoring='neg_mean_absolute_error')

which we can then inspect for each CV fold:

for i in range(10):
    print(
        'Fold {} -- Acc = {}, MAE = {}'.format(i, np.round(acc_rf[i], 3), np.round(-mae_rf[i], 3))
    )

Fold 0 -- Acc = 0.438, MAE = 1.062
Fold 1 -- Acc = 0.562, MAE = 0.875
Fold 2 -- Acc = 0.5, MAE = 1.5
Fold 3 -- Acc = 0.562, MAE = 1.062
Fold 4 -- Acc = 0.562, MAE = 1.188
Fold 5 -- Acc = 0.533, MAE = 1.267
Fold 6 -- Acc = 0.6, MAE = 0.8
Fold 7 -- Acc = 0.667, MAE = 0.933
Fold 8 -- Acc = 0.467, MAE = 0.533
Fold 9 -- Acc = 0.4, MAE = 1.333

and overall:

print('Accuracy = {}, MAE = {}, Chance = {}'.format(np.round(np.mean(acc_rf), 3),
                                                    np.round(np.mean(-mae_rf), 3),
                                                    np.round(1/len(labels.unique()), 3)))

Accuracy = 0.529, MAE = 1.055, Chance = 0.167
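Two details of the evaluation above are worth a second look. First, accuracy and MAE come from separate cross-validation calls, and since `RandomForestClassifier()` is created without a fixed `random_state`, each call trains different forests. Second, a chance level of 1/6 assumes equally sized groups, whereas always guessing the most frequent group (`5yo`, 34 of 155) would already be right about 22% of the time. The sketch below shows one possible way to handle both on random stand-in data: a single `cross_validate` call with several metrics, plus a `DummyClassifier` as an empirical baseline. The data, the class sizes and all variable names are made up for illustration.

```python
import numpy as np
from sklearn.dummy import DummyClassifier
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import cross_validate
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

# Random stand-in data: 60 samples, 20 features, 3 imbalanced classes.
rng = np.random.default_rng(0)
X_toy = rng.normal(size=(60, 20))
y_toy = np.repeat([0, 1, 2], [30, 20, 10])

toy_pipe = make_pipeline(StandardScaler(),
                         RandomForestClassifier(n_estimators=20, random_state=0))

# One call, several metrics: every score comes from the same fitted models.
scores = cross_validate(toy_pipe, X_toy, y_toy, cv=5,
                        scoring=['accuracy', 'neg_mean_absolute_error'])
print(scores['test_accuracy'].mean(),
      -scores['test_neg_mean_absolute_error'].mean())

# Baseline that always predicts the most frequent class.
baseline = cross_validate(DummyClassifier(strategy='most_frequent'), X_toy, y_toy,
                          cv=5, scoring='accuracy')
print(baseline['test_score'].mean())  # 0.5 here, although 1/3 would be "chance"
```

On the toy data the majority class makes up half of the samples, so the baseline lands at 0.5 instead of 1/3; the gap is smaller for the real labels but points in the same direction.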

An accuracy of 0.529 against a chance level of 0.167: that's a pretty good performance, eh? The amazing power of machine learning! But there's more: you also try out an artificial neural network (ANN) to see if it provides even better predictions.

That's as easy as the "basic machine learning pipeline". Just import the respective functions and classes:

import tensorflow as tf

from tensorflow import keras

from tensorflow.keras import layers

2025-06-17 17:46:51.623493: I tensorflow/core/platform/cpu_feature_guard.cc:210] This TensorFlow binary is optimized to use available CPU instructions in performance-critical operations. To enable the following instructions: AVX2 FMA, in other operations, rebuild TensorFlow with the appropriate compiler flags.

Define a simple ANN with four dense (fully connected) layers:

model = keras.Sequential()

model.add(layers.Input(shape=data[1].shape))

model.add(layers.Dense(100, activation="relu", kernel_initializer='he_normal', bias_initializer='zeros'))

model.add(layers.BatchNormalization())

model.add(layers.Dropout(0.5))

model.add(layers.Dense(50, activation="relu"))

model.add(layers.BatchNormalization())

model.add(layers.Dropout(0.5))

model.add(layers.Dense(25, activation="relu"))

model.add(layers.BatchNormalization())

model.add(layers.Dropout(0.5))

model.add(layers.Dense(len(labels.unique()), activation='softmax'))

model.compile(loss='sparse_categorical_crossentropy',
              optimizer='adam',
              metrics=['accuracy'])
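To get a feeling for how big this "simple" network is, here is a rough back-of-the-envelope count of its parameters in plain Python. It only uses the layer widths from the definition above, the 2016 input features and the 6 age groups; a dense layer has one weight per input-output pair plus one bias per unit, and each `BatchNormalization` layer keeps four values per unit.

```python
# Layer widths taken from the model definition above.
n_inputs = 2016                 # features per participant (data.shape[1])
dense_units = [100, 50, 25, 6]  # 6 = number of age groups

dense_params = []
fan_in = n_inputs
for units in dense_units:
    dense_params.append(fan_in * units + units)  # weights + biases
    fan_in = units

# Each BatchNormalization layer holds 4 values per unit
# (gamma, beta, moving mean, moving variance); only gamma and beta are trained.
batchnorm_params = [4 * units for units in dense_units[:-1]]

print(dense_params)           # [201700, 5050, 1275, 156]
print(sum(dense_params))      # 208181
print(sum(batchnorm_params))  # 700
```

That is more than 200,000 trainable weights for 155 participants, almost all of them in the first layer.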

Split the data into training and test sets again, this time with a single hold-out split:

from sklearn.model_selection import train_test_split

X_train, X_test, y_train, y_test = train_test_split(data, pd.Categorical(labels).codes, test_size=0.2, shuffle=True)
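Note that this call shuffles without a fixed seed, so every run produces a different split. A minimal sketch of one way to pin the split down is shown below, using `random_state` and, as an optional extra, `stratify` to keep the group proportions similar in both sets. The stand-in data, the class sizes and the `split` helper are made up for illustration; only the total of 155 samples and the 6 classes mirror the real data.

```python
import numpy as np
from sklearn.model_selection import train_test_split

# Random stand-in data: 155 samples, 6 classes (class sizes are made up).
rng = np.random.default_rng(0)
X_toy = rng.normal(size=(155, 5))
y_toy = np.repeat(np.arange(6), [34, 30, 30, 25, 20, 16])


def split(seed):
    # random_state fixes the shuffling; stratify keeps class proportions similar.
    return train_test_split(X_toy, y_toy, test_size=0.2, shuffle=True,
                            stratify=y_toy, random_state=seed)


X_train_a, X_test_a, y_train_a, y_test_a = split(seed=42)
X_train_b, X_test_b, y_train_b, y_test_b = split(seed=42)

print(np.array_equal(X_test_a, X_test_b))  # True: same seed, same split
print(len(y_train_a), len(y_test_a))
```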

Then train your model:

%time fit = model.fit(X_train, y_train, epochs=300, batch_size=20, validation_split=0.2)

Epoch 1/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 5s 123ms/step - accuracy: 0.1772 - loss: 2.7513 - val_accuracy: 0.0800 - val_loss: 1.7977 Epoch 2/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 29ms/step - accuracy: 0.1637 - loss: 2.6742 - val_accuracy: 0.1600 - val_loss: 1.8318 Epoch 3/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 30ms/step - accuracy: 0.1447 - loss: 2.4660 - val_accuracy: 0.2000 - val_loss: 1.8271 Epoch 4/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 29ms/step - accuracy: 0.2952 - loss: 2.2985 - val_accuracy: 0.2000 - val_loss: 1.8011 Epoch 5/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 35ms/step - accuracy: 0.2878 - loss: 2.2132 - val_accuracy: 0.2000 - val_loss: 1.7646 Epoch 6/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 29ms/step - accuracy: 0.2835 - loss: 1.9437 - val_accuracy: 0.2800 - val_loss: 1.7387 Epoch 7/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 31ms/step - accuracy: 0.3140 - loss: 1.8654 - val_accuracy: 0.2800 - val_loss: 1.7189 Epoch 8/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 30ms/step - accuracy: 0.2794 - loss: 1.9797 - val_accuracy: 0.2800 - val_loss: 1.6999 Epoch 9/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 39ms/step - accuracy: 0.3888 - loss: 1.9332 - val_accuracy: 0.3200 - val_loss: 1.6898 Epoch 10/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 34ms/step - accuracy: 0.4483 - loss: 1.7435 - val_accuracy: 0.3200 - val_loss: 1.6741 Epoch 11/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 40ms/step - accuracy: 0.3392 - loss: 1.7879 - val_accuracy: 0.3200 - val_loss: 1.6630 Epoch 12/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 33ms/step - accuracy: 0.2581 - loss: 1.9323 - val_accuracy: 0.3200 - val_loss: 1.6404 Epoch 13/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 34ms/step - accuracy: 0.3007 - loss: 1.8283 - val_accuracy: 0.3200 - val_loss: 1.6138 Epoch 14/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 29ms/step - accuracy: 0.3844 - loss: 1.6284 - val_accuracy: 0.3600 - val_loss: 1.5779 Epoch 15/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 32ms/step - accuracy: 0.5311 - loss: 1.4283 - val_accuracy: 0.4000 - val_loss: 1.5459 Epoch 16/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 31ms/step - accuracy: 0.3965 - loss: 1.6158 - val_accuracy: 
0.4400 - val_loss: 1.5186 Epoch 17/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 28ms/step - accuracy: 0.5235 - loss: 1.4134 - val_accuracy: 0.4800 - val_loss: 1.4948 Epoch 18/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 28ms/step - accuracy: 0.4029 - loss: 1.6265 - val_accuracy: 0.5600 - val_loss: 1.4720 Epoch 19/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 32ms/step - accuracy: 0.4500 - loss: 1.5120 - val_accuracy: 0.6000 - val_loss: 1.4552 Epoch 20/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 29ms/step - accuracy: 0.3627 - loss: 1.5953 - val_accuracy: 0.6000 - val_loss: 1.4316 Epoch 21/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 29ms/step - accuracy: 0.4187 - loss: 1.6154 - val_accuracy: 0.5600 - val_loss: 1.4201 Epoch 22/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 30ms/step - accuracy: 0.3766 - loss: 1.6040 - val_accuracy: 0.5600 - val_loss: 1.4117 Epoch 23/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 29ms/step - accuracy: 0.2851 - loss: 1.5908 - val_accuracy: 0.5600 - val_loss: 1.4040 Epoch 24/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 32ms/step - accuracy: 0.4138 - loss: 1.4599 - val_accuracy: 0.5600 - val_loss: 1.3880 Epoch 25/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 32ms/step - accuracy: 0.4581 - loss: 1.2933 - val_accuracy: 0.5200 - val_loss: 1.3633 Epoch 26/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 32ms/step - accuracy: 0.4581 - loss: 1.2729 - val_accuracy: 0.5200 - val_loss: 1.3401 Epoch 27/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 32ms/step - accuracy: 0.5618 - loss: 1.1328 - val_accuracy: 0.5200 - val_loss: 1.3261 Epoch 28/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 30ms/step - accuracy: 0.5874 - loss: 1.2366 - val_accuracy: 0.6000 - val_loss: 1.3088 Epoch 29/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 32ms/step - accuracy: 0.5983 - loss: 1.1358 - val_accuracy: 0.6000 - val_loss: 1.2960 Epoch 30/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 29ms/step - accuracy: 0.5892 - loss: 1.1366 - val_accuracy: 0.6000 - val_loss: 1.2988 Epoch 31/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 35ms/step - accuracy: 0.5724 - loss: 1.1277 - val_accuracy: 0.6400 - val_loss: 1.2973 Epoch 32/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 29ms/step - accuracy: 
0.5227 - loss: 1.1174 - val_accuracy: 0.6400 - val_loss: 1.2808 Epoch 33/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 30ms/step - accuracy: 0.5585 - loss: 1.0932 - val_accuracy: 0.6400 - val_loss: 1.2613 Epoch 34/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 31ms/step - accuracy: 0.5715 - loss: 1.0680 - val_accuracy: 0.6400 - val_loss: 1.2398 Epoch 35/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 29ms/step - accuracy: 0.5610 - loss: 1.0196 - val_accuracy: 0.6400 - val_loss: 1.2213 Epoch 36/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 29ms/step - accuracy: 0.5802 - loss: 1.1671 - val_accuracy: 0.6400 - val_loss: 1.2033 Epoch 37/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 34ms/step - accuracy: 0.6257 - loss: 0.9556 - val_accuracy: 0.6400 - val_loss: 1.1890 Epoch 38/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 30ms/step - accuracy: 0.6424 - loss: 0.9940 - val_accuracy: 0.6400 - val_loss: 1.1815 Epoch 39/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 33ms/step - accuracy: 0.5371 - loss: 1.0191 - val_accuracy: 0.6400 - val_loss: 1.1647 Epoch 40/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 31ms/step - accuracy: 0.5751 - loss: 1.2073 - val_accuracy: 0.6800 - val_loss: 1.1485 Epoch 41/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 30ms/step - accuracy: 0.6775 - loss: 0.9144 - val_accuracy: 0.6000 - val_loss: 1.1134 Epoch 42/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 31ms/step - accuracy: 0.6668 - loss: 0.9838 - val_accuracy: 0.6000 - val_loss: 1.0894 Epoch 43/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 28ms/step - accuracy: 0.7303 - loss: 0.8039 - val_accuracy: 0.6000 - val_loss: 1.0771 Epoch 44/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 28ms/step - accuracy: 0.6431 - loss: 0.8842 - val_accuracy: 0.6000 - val_loss: 1.0863 Epoch 45/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 31ms/step - accuracy: 0.6926 - loss: 0.8289 - val_accuracy: 0.5600 - val_loss: 1.0954 Epoch 46/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 29ms/step - accuracy: 0.6555 - loss: 1.0113 - val_accuracy: 0.5200 - val_loss: 1.0950 Epoch 47/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 28ms/step - accuracy: 0.6919 - loss: 0.7848 - val_accuracy: 0.5200 - val_loss: 1.0961 Epoch 48/300 5/5 
━━━━━━━━━━━━━━━━━━━━ 0s 30ms/step - accuracy: 0.7216 - loss: 0.7579 - val_accuracy: 0.5600 - val_loss: 1.0868 Epoch 49/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 30ms/step - accuracy: 0.6602 - loss: 0.8519 - val_accuracy: 0.6000 - val_loss: 1.0731 Epoch 50/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 31ms/step - accuracy: 0.5469 - loss: 1.0536 - val_accuracy: 0.6000 - val_loss: 1.0624 Epoch 51/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 33ms/step - accuracy: 0.7789 - loss: 0.7038 - val_accuracy: 0.6400 - val_loss: 1.0573 Epoch 52/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 31ms/step - accuracy: 0.8068 - loss: 0.6971 - val_accuracy: 0.6400 - val_loss: 1.0518 Epoch 53/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 30ms/step - accuracy: 0.6393 - loss: 0.8736 - val_accuracy: 0.7200 - val_loss: 1.0535 Epoch 54/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 30ms/step - accuracy: 0.7366 - loss: 0.7970 - val_accuracy: 0.6800 - val_loss: 1.0544 Epoch 55/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 29ms/step - accuracy: 0.7495 - loss: 0.7462 - val_accuracy: 0.6400 - val_loss: 1.0599 Epoch 56/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 30ms/step - accuracy: 0.8051 - loss: 0.6466 - val_accuracy: 0.6800 - val_loss: 1.0556 Epoch 57/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 31ms/step - accuracy: 0.7738 - loss: 0.7408 - val_accuracy: 0.7200 - val_loss: 1.0435 Epoch 58/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 28ms/step - accuracy: 0.8215 - loss: 0.6844 - val_accuracy: 0.7200 - val_loss: 1.0049 Epoch 59/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 29ms/step - accuracy: 0.7712 - loss: 0.6838 - val_accuracy: 0.7200 - val_loss: 0.9766 Epoch 60/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 32ms/step - accuracy: 0.7499 - loss: 0.6911 - val_accuracy: 0.6800 - val_loss: 0.9532 Epoch 61/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 31ms/step - accuracy: 0.7701 - loss: 0.6356 - val_accuracy: 0.6800 - val_loss: 0.9267 Epoch 62/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 30ms/step - accuracy: 0.7946 - loss: 0.6589 - val_accuracy: 0.6800 - val_loss: 0.9164 Epoch 63/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 36ms/step - accuracy: 0.8128 - loss: 0.5685 - val_accuracy: 0.7200 - 
val_loss: 0.9129 Epoch 64/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 30ms/step - accuracy: 0.7485 - loss: 0.8008 - val_accuracy: 0.7200 - val_loss: 0.9032 Epoch 65/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 30ms/step - accuracy: 0.7494 - loss: 0.6753 - val_accuracy: 0.6800 - val_loss: 0.9114 Epoch 66/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 31ms/step - accuracy: 0.8256 - loss: 0.5654 - val_accuracy: 0.6800 - val_loss: 0.9180 Epoch 67/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 28ms/step - accuracy: 0.7361 - loss: 0.7065 - val_accuracy: 0.6400 - val_loss: 0.9252 Epoch 68/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 29ms/step - accuracy: 0.7492 - loss: 0.6729 - val_accuracy: 0.7200 - val_loss: 0.9019 Epoch 69/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 30ms/step - accuracy: 0.7291 - loss: 0.6714 - val_accuracy: 0.7200 - val_loss: 0.8647 Epoch 70/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 29ms/step - accuracy: 0.7634 - loss: 0.6605 - val_accuracy: 0.7600 - val_loss: 0.8531 Epoch 71/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 30ms/step - accuracy: 0.7353 - loss: 0.6661 - val_accuracy: 0.7200 - val_loss: 0.8516 Epoch 72/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 30ms/step - accuracy: 0.7536 - loss: 0.6632 - val_accuracy: 0.7200 - val_loss: 0.8419 Epoch 73/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 31ms/step - accuracy: 0.7127 - loss: 0.7028 - val_accuracy: 0.7200 - val_loss: 0.8314 Epoch 74/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 32ms/step - accuracy: 0.7630 - loss: 0.6544 - val_accuracy: 0.7200 - val_loss: 0.8372 Epoch 75/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 33ms/step - accuracy: 0.8218 - loss: 0.5730 - val_accuracy: 0.7200 - val_loss: 0.8526 Epoch 76/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 30ms/step - accuracy: 0.7896 - loss: 0.6575 - val_accuracy: 0.7200 - val_loss: 0.8492 Epoch 77/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 31ms/step - accuracy: 0.8095 - loss: 0.6107 - val_accuracy: 0.7200 - val_loss: 0.8284 Epoch 78/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 31ms/step - accuracy: 0.8321 - loss: 0.4874 - val_accuracy: 0.7200 - val_loss: 0.7997 Epoch 79/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 27ms/step - accuracy: 0.8877 - loss: 
0.4820 - val_accuracy: 0.6800 - val_loss: 0.7995 Epoch 80/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 25ms/step - accuracy: 0.7737 - loss: 0.5459 - val_accuracy: 0.6800 - val_loss: 0.8253 Epoch 81/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 26ms/step - accuracy: 0.8095 - loss: 0.5619 - val_accuracy: 0.6800 - val_loss: 0.8554 Epoch 82/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 26ms/step - accuracy: 0.8461 - loss: 0.5115 - val_accuracy: 0.6800 - val_loss: 0.8761 Epoch 83/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 26ms/step - accuracy: 0.8219 - loss: 0.4868 - val_accuracy: 0.6400 - val_loss: 0.8954 Epoch 84/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 25ms/step - accuracy: 0.8304 - loss: 0.4401 - val_accuracy: 0.6400 - val_loss: 0.9483 Epoch 85/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 27ms/step - accuracy: 0.8081 - loss: 0.5562 - val_accuracy: 0.6000 - val_loss: 0.9943 Epoch 86/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 26ms/step - accuracy: 0.8561 - loss: 0.4915 - val_accuracy: 0.5600 - val_loss: 1.0129 Epoch 87/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 31ms/step - accuracy: 0.8063 - loss: 0.6314 - val_accuracy: 0.5600 - val_loss: 1.0145 Epoch 88/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 31ms/step - accuracy: 0.8174 - loss: 0.5143 - val_accuracy: 0.5600 - val_loss: 1.0168 Epoch 89/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 29ms/step - accuracy: 0.8812 - loss: 0.4885 - val_accuracy: 0.6000 - val_loss: 1.0476 Epoch 90/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 34ms/step - accuracy: 0.8504 - loss: 0.4935 - val_accuracy: 0.5600 - val_loss: 1.0384 Epoch 91/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 31ms/step - accuracy: 0.7496 - loss: 0.6084 - val_accuracy: 0.5600 - val_loss: 1.0454 Epoch 92/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 30ms/step - accuracy: 0.8259 - loss: 0.6066 - val_accuracy: 0.6000 - val_loss: 1.0338 Epoch 93/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 33ms/step - accuracy: 0.8458 - loss: 0.5114 - val_accuracy: 0.5600 - val_loss: 1.0359 Epoch 94/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 37ms/step - accuracy: 0.8437 - loss: 0.4614 - val_accuracy: 0.6000 - val_loss: 1.0287 Epoch 95/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 
30ms/step - accuracy: 0.9089 - loss: 0.4450 - val_accuracy: 0.5600 - val_loss: 1.0255 Epoch 96/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 31ms/step - accuracy: 0.8757 - loss: 0.4630 - val_accuracy: 0.5200 - val_loss: 1.0464 Epoch 97/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 31ms/step - accuracy: 0.8430 - loss: 0.4633 - val_accuracy: 0.4800 - val_loss: 1.0547 Epoch 98/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 29ms/step - accuracy: 0.8742 - loss: 0.4082 - val_accuracy: 0.5200 - val_loss: 1.0557 Epoch 99/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 34ms/step - accuracy: 0.9320 - loss: 0.3964 - val_accuracy: 0.6000 - val_loss: 1.0298 Epoch 100/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 31ms/step - accuracy: 0.8191 - loss: 0.5101 - val_accuracy: 0.6000 - val_loss: 1.0125 Epoch 101/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 30ms/step - accuracy: 0.8868 - loss: 0.4295 - val_accuracy: 0.6000 - val_loss: 0.9926 Epoch 102/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 31ms/step - accuracy: 0.8647 - loss: 0.4807 - val_accuracy: 0.6000 - val_loss: 0.9723 Epoch 103/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 30ms/step - accuracy: 0.8227 - loss: 0.5504 - val_accuracy: 0.5600 - val_loss: 0.9680 Epoch 104/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 36ms/step - accuracy: 0.8648 - loss: 0.3469 - val_accuracy: 0.5600 - val_loss: 1.0258 Epoch 105/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 35ms/step - accuracy: 0.8984 - loss: 0.3746 - val_accuracy: 0.5600 - val_loss: 1.0483 Epoch 106/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 31ms/step - accuracy: 0.7998 - loss: 0.5404 - val_accuracy: 0.5600 - val_loss: 1.0162 Epoch 107/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 32ms/step - accuracy: 0.7889 - loss: 0.5082 - val_accuracy: 0.6000 - val_loss: 0.9596 Epoch 108/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 29ms/step - accuracy: 0.7954 - loss: 0.4958 - val_accuracy: 0.6400 - val_loss: 0.9244 Epoch 109/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 31ms/step - accuracy: 0.8731 - loss: 0.4517 - val_accuracy: 0.6400 - val_loss: 0.9351 Epoch 110/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 31ms/step - accuracy: 0.9017 - loss: 0.3787 - val_accuracy: 0.6400 - val_loss: 
0.9415 Epoch 111/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 29ms/step - accuracy: 0.8913 - loss: 0.3984 - val_accuracy: 0.6800 - val_loss: 0.9310 Epoch 112/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 31ms/step - accuracy: 0.9492 - loss: 0.3461 - val_accuracy: 0.6800 - val_loss: 0.9287 Epoch 113/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 31ms/step - accuracy: 0.8451 - loss: 0.4681 - val_accuracy: 0.6800 - val_loss: 0.9097 Epoch 114/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 28ms/step - accuracy: 0.8943 - loss: 0.4140 - val_accuracy: 0.6800 - val_loss: 0.9044 Epoch 115/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 30ms/step - accuracy: 0.8130 - loss: 0.4715 - val_accuracy: 0.6800 - val_loss: 0.9075 Epoch 116/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 31ms/step - accuracy: 0.9051 - loss: 0.3575 - val_accuracy: 0.6800 - val_loss: 0.9037 Epoch 117/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 31ms/step - accuracy: 0.7668 - loss: 0.4677 - val_accuracy: 0.6800 - val_loss: 0.8970 Epoch 118/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 28ms/step - accuracy: 0.9153 - loss: 0.3547 - val_accuracy: 0.6800 - val_loss: 0.8921 Epoch 119/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 30ms/step - accuracy: 0.8774 - loss: 0.3541 - val_accuracy: 0.6800 - val_loss: 0.8671 Epoch 120/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 30ms/step - accuracy: 0.7839 - loss: 0.5486 - val_accuracy: 0.6800 - val_loss: 0.8425 Epoch 121/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 28ms/step - accuracy: 0.9037 - loss: 0.3771 - val_accuracy: 0.7200 - val_loss: 0.7855 Epoch 122/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 32ms/step - accuracy: 0.9341 - loss: 0.3761 - val_accuracy: 0.7200 - val_loss: 0.7622 Epoch 123/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 29ms/step - accuracy: 0.8313 - loss: 0.4784 - val_accuracy: 0.7200 - val_loss: 0.7514 Epoch 124/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 27ms/step - accuracy: 0.9209 - loss: 0.3360 - val_accuracy: 0.7200 - val_loss: 0.7394 Epoch 125/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 29ms/step - accuracy: 0.8948 - loss: 0.3435 - val_accuracy: 0.7200 - val_loss: 0.7264 Epoch 126/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 30ms/step - accuracy: 0.8412 - 
loss: 0.4011 - val_accuracy: 0.6800 - val_loss: 0.7237 Epoch 127/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 30ms/step - accuracy: 0.8431 - loss: 0.3810 - val_accuracy: 0.6800 - val_loss: 0.7309 Epoch 128/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 34ms/step - accuracy: 0.9693 - loss: 0.2410 - val_accuracy: 0.6800 - val_loss: 0.7422 Epoch 129/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 31ms/step - accuracy: 0.8197 - loss: 0.4111 - val_accuracy: 0.6800 - val_loss: 0.7478 Epoch 130/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 28ms/step - accuracy: 0.9651 - loss: 0.2351 - val_accuracy: 0.6800 - val_loss: 0.7547 Epoch 131/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 33ms/step - accuracy: 0.9149 - loss: 0.3156 - val_accuracy: 0.6800 - val_loss: 0.7638 Epoch 132/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 57ms/step - accuracy: 0.9092 - loss: 0.3236 - val_accuracy: 0.6800 - val_loss: 0.7774 Epoch 133/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 34ms/step - accuracy: 0.9022 - loss: 0.3351 - val_accuracy: 0.6800 - val_loss: 0.7838 Epoch 134/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 30ms/step - accuracy: 0.9255 - loss: 0.2611 - val_accuracy: 0.7200 - val_loss: 0.7795 Epoch 135/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 28ms/step - accuracy: 0.9532 - loss: 0.2641 - val_accuracy: 0.6800 - val_loss: 0.7739 Epoch 136/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 30ms/step - accuracy: 0.9291 - loss: 0.3012 - val_accuracy: 0.6800 - val_loss: 0.7709 Epoch 137/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 32ms/step - accuracy: 0.8692 - loss: 0.3155 - val_accuracy: 0.6800 - val_loss: 0.7739 Epoch 138/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 34ms/step - accuracy: 0.9340 - loss: 0.2040 - val_accuracy: 0.6800 - val_loss: 0.7759 Epoch 139/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 28ms/step - accuracy: 0.9700 - loss: 0.1870 - val_accuracy: 0.7200 - val_loss: 0.7776 Epoch 140/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 26ms/step - accuracy: 0.9129 - loss: 0.2664 - val_accuracy: 0.7200 - val_loss: 0.7917 Epoch 141/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 26ms/step - accuracy: 0.8569 - loss: 0.3985 - val_accuracy: 0.7200 - val_loss: 0.8020 Epoch 142/300 5/5 
━━━━━━━━━━━━━━━━━━━━ 0s 27ms/step - accuracy: 0.9405 - loss: 0.2604 - val_accuracy: 0.6800 - val_loss: 0.8247 Epoch 143/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 26ms/step - accuracy: 0.8755 - loss: 0.3260 - val_accuracy: 0.6800 - val_loss: 0.8394 Epoch 144/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 28ms/step - accuracy: 0.9063 - loss: 0.2936 - val_accuracy: 0.6800 - val_loss: 0.8589 Epoch 145/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 30ms/step - accuracy: 0.9512 - loss: 0.2065 - val_accuracy: 0.6800 - val_loss: 0.8632 Epoch 146/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 38ms/step - accuracy: 0.8704 - loss: 0.3388 - val_accuracy: 0.6800 - val_loss: 0.8728 Epoch 147/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 43ms/step - accuracy: 0.8993 - loss: 0.3323 - val_accuracy: 0.6800 - val_loss: 0.8993 Epoch 148/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 36ms/step - accuracy: 0.8136 - loss: 0.3787 - val_accuracy: 0.6800 - val_loss: 0.9645 Epoch 149/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 33ms/step - accuracy: 0.9460 - loss: 0.2426 - val_accuracy: 0.6000 - val_loss: 1.0498 Epoch 150/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 34ms/step - accuracy: 0.9373 - loss: 0.2840 - val_accuracy: 0.6000 - val_loss: 1.1166 Epoch 151/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 28ms/step - accuracy: 0.8494 - loss: 0.4648 - val_accuracy: 0.6000 - val_loss: 1.1434 Epoch 152/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 26ms/step - accuracy: 0.9547 - loss: 0.2184 - val_accuracy: 0.6000 - val_loss: 1.1332 Epoch 153/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 26ms/step - accuracy: 0.9258 - loss: 0.2883 - val_accuracy: 0.6000 - val_loss: 1.1108 Epoch 154/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 25ms/step - accuracy: 0.9519 - loss: 0.2997 - val_accuracy: 0.6000 - val_loss: 1.0560 Epoch 155/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 28ms/step - accuracy: 0.9174 - loss: 0.2414 - val_accuracy: 0.6400 - val_loss: 0.9705 Epoch 156/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 30ms/step - accuracy: 0.9842 - loss: 0.2085 - val_accuracy: 0.6400 - val_loss: 0.9280 Epoch 157/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 29ms/step - accuracy: 0.9159 - loss: 0.1888 - 
val_accuracy: 0.6400 - val_loss: 0.9375 Epoch 158/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 29ms/step - accuracy: 0.9711 - loss: 0.2359 - val_accuracy: 0.6400 - val_loss: 0.9731 Epoch 159/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 29ms/step - accuracy: 0.9767 - loss: 0.1685 - val_accuracy: 0.6400 - val_loss: 1.0009 Epoch 160/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 33ms/step - accuracy: 0.9182 - loss: 0.2629 - val_accuracy: 0.6400 - val_loss: 1.0089 Epoch 161/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 34ms/step - accuracy: 0.9445 - loss: 0.2349 - val_accuracy: 0.6400 - val_loss: 1.0081 Epoch 162/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 33ms/step - accuracy: 0.9017 - loss: 0.2919 - val_accuracy: 0.6400 - val_loss: 1.0142 Epoch 163/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 30ms/step - accuracy: 0.9034 - loss: 0.2646 - val_accuracy: 0.6400 - val_loss: 0.9902 Epoch 164/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 28ms/step - accuracy: 0.8926 - loss: 0.3683 - val_accuracy: 0.6400 - val_loss: 0.9572 Epoch 165/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 30ms/step - accuracy: 0.9175 - loss: 0.2830 - val_accuracy: 0.6400 - val_loss: 0.9542 Epoch 166/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 29ms/step - accuracy: 0.9412 - loss: 0.2244 - val_accuracy: 0.6400 - val_loss: 0.9711 Epoch 167/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 34ms/step - accuracy: 0.9166 - loss: 0.3995 - val_accuracy: 0.6800 - val_loss: 0.9858 Epoch 168/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 29ms/step - accuracy: 0.9477 - loss: 0.2016 - val_accuracy: 0.6800 - val_loss: 0.9794 Epoch 169/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 27ms/step - accuracy: 0.9490 - loss: 0.2377 - val_accuracy: 0.6800 - val_loss: 0.9729 Epoch 170/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 28ms/step - accuracy: 0.9706 - loss: 0.1854 - val_accuracy: 0.6800 - val_loss: 0.9803 Epoch 171/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 29ms/step - accuracy: 0.9562 - loss: 0.1973 - val_accuracy: 0.6800 - val_loss: 1.0029 Epoch 172/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 28ms/step - accuracy: 0.9700 - loss: 0.1371 - val_accuracy: 0.6800 - val_loss: 1.0113 Epoch 173/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 
0s 30ms/step - accuracy: 0.9461 - loss: 0.2360 - val_accuracy: 0.6400 - val_loss: 1.0066 Epoch 174/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 30ms/step - accuracy: 0.9375 - loss: 0.1861 - val_accuracy: 0.6400 - val_loss: 0.9992 Epoch 175/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 41ms/step - accuracy: 0.8762 - loss: 0.2695 - val_accuracy: 0.6800 - val_loss: 1.0023 Epoch 176/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 29ms/step - accuracy: 0.9263 - loss: 0.2599 - val_accuracy: 0.6800 - val_loss: 1.0022 Epoch 177/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 30ms/step - accuracy: 0.9218 - loss: 0.2806 - val_accuracy: 0.6800 - val_loss: 1.0048 Epoch 178/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 34ms/step - accuracy: 0.9395 - loss: 0.1944 - val_accuracy: 0.6800 - val_loss: 0.9972 Epoch 179/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 38ms/step - accuracy: 0.9493 - loss: 0.2034 - val_accuracy: 0.6800 - val_loss: 0.9737 Epoch 180/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 32ms/step - accuracy: 0.8004 - loss: 0.4326 - val_accuracy: 0.6800 - val_loss: 0.9698 Epoch 181/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 31ms/step - accuracy: 0.9569 - loss: 0.2538 - val_accuracy: 0.6400 - val_loss: 0.9254 Epoch 182/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 33ms/step - accuracy: 0.9725 - loss: 0.1656 - val_accuracy: 0.6400 - val_loss: 0.9090 Epoch 183/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 32ms/step - accuracy: 0.9498 - loss: 0.2122 - val_accuracy: 0.6000 - val_loss: 0.9085 Epoch 184/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 29ms/step - accuracy: 0.9542 - loss: 0.1859 - val_accuracy: 0.6000 - val_loss: 0.9054 Epoch 185/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 29ms/step - accuracy: 0.9884 - loss: 0.1414 - val_accuracy: 0.6400 - val_loss: 0.9230 Epoch 186/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 30ms/step - accuracy: 0.9389 - loss: 0.2111 - val_accuracy: 0.6400 - val_loss: 0.9680 Epoch 187/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 31ms/step - accuracy: 0.9670 - loss: 0.1651 - val_accuracy: 0.6000 - val_loss: 1.0490 Epoch 188/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 44ms/step - accuracy: 0.9493 - loss: 0.1902 - val_accuracy: 0.5600 - 
val_loss: 1.0592 Epoch 189/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 33ms/step - accuracy: 0.9262 - loss: 0.3474 - val_accuracy: 0.5600 - val_loss: 1.0579 Epoch 190/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 39ms/step - accuracy: 0.9283 - loss: 0.2600 - val_accuracy: 0.5600 - val_loss: 1.0809 Epoch 191/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 29ms/step - accuracy: 0.9644 - loss: 0.1741 - val_accuracy: 0.5600 - val_loss: 1.0929 Epoch 192/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 35ms/step - accuracy: 0.9721 - loss: 0.1497 - val_accuracy: 0.5600 - val_loss: 1.1117 Epoch 193/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 37ms/step - accuracy: 0.9842 - loss: 0.1281 - val_accuracy: 0.5200 - val_loss: 1.1596 Epoch 194/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 33ms/step - accuracy: 0.9428 - loss: 0.1917 - val_accuracy: 0.5200 - val_loss: 1.2060 Epoch 195/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 32ms/step - accuracy: 0.9218 - loss: 0.2193 - val_accuracy: 0.5200 - val_loss: 1.2219 Epoch 196/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 31ms/step - accuracy: 0.9809 - loss: 0.1424 - val_accuracy: 0.5200 - val_loss: 1.2030 Epoch 197/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 27ms/step - accuracy: 0.9249 - loss: 0.2332 - val_accuracy: 0.5200 - val_loss: 1.2049 Epoch 198/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 28ms/step - accuracy: 0.9532 - loss: 0.1658 - val_accuracy: 0.5200 - val_loss: 1.2085 Epoch 199/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 26ms/step - accuracy: 0.9933 - loss: 0.1213 - val_accuracy: 0.5200 - val_loss: 1.2210 Epoch 200/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 26ms/step - accuracy: 0.9809 - loss: 0.1374 - val_accuracy: 0.5600 - val_loss: 1.2298 Epoch 201/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 26ms/step - accuracy: 0.9698 - loss: 0.1493 - val_accuracy: 0.5600 - val_loss: 1.2291 Epoch 202/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 29ms/step - accuracy: 0.9793 - loss: 0.1445 - val_accuracy: 0.5600 - val_loss: 1.2328 Epoch 203/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 28ms/step - accuracy: 0.9891 - loss: 0.0996 - val_accuracy: 0.5600 - val_loss: 1.2087 Epoch 204/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 28ms/step - 
accuracy: 0.9759 - loss: 0.1854 - val_accuracy: 0.5600 - val_loss: 1.1759 Epoch 205/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 32ms/step - accuracy: 0.9876 - loss: 0.1142 - val_accuracy: 0.5600 - val_loss: 1.1498 Epoch 206/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 28ms/step - accuracy: 0.9803 - loss: 0.1168 - val_accuracy: 0.5600 - val_loss: 1.1397 Epoch 207/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 26ms/step - accuracy: 0.9387 - loss: 0.1620 - val_accuracy: 0.5600 - val_loss: 1.1585 Epoch 208/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 27ms/step - accuracy: 1.0000 - loss: 0.1242 - val_accuracy: 0.5600 - val_loss: 1.1746 Epoch 209/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 29ms/step - accuracy: 0.9335 - loss: 0.2594 - val_accuracy: 0.5600 - val_loss: 1.1809 Epoch 210/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 28ms/step - accuracy: 0.9263 - loss: 0.2466 - val_accuracy: 0.5200 - val_loss: 1.2428 Epoch 211/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 30ms/step - accuracy: 0.9444 - loss: 0.2149 - val_accuracy: 0.5600 - val_loss: 1.2445 Epoch 212/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 25ms/step - accuracy: 0.9582 - loss: 0.1815 - val_accuracy: 0.6000 - val_loss: 1.2124 Epoch 213/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 30ms/step - accuracy: 0.9497 - loss: 0.1944 - val_accuracy: 0.5600 - val_loss: 1.2016 Epoch 214/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 25ms/step - accuracy: 0.9796 - loss: 0.1504 - val_accuracy: 0.5600 - val_loss: 1.2013 Epoch 215/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 27ms/step - accuracy: 0.9403 - loss: 0.1578 - val_accuracy: 0.5600 - val_loss: 1.2598 Epoch 216/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 26ms/step - accuracy: 0.9503 - loss: 0.2754 - val_accuracy: 0.6400 - val_loss: 1.2682 Epoch 217/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 27ms/step - accuracy: 0.9803 - loss: 0.1270 - val_accuracy: 0.6000 - val_loss: 1.3079 Epoch 218/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 26ms/step - accuracy: 0.9380 - loss: 0.1947 - val_accuracy: 0.6000 - val_loss: 1.3696 Epoch 219/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 26ms/step - accuracy: 0.9589 - loss: 0.1368 - val_accuracy: 0.6000 - val_loss: 1.4374 Epoch 
220/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 36ms/step - accuracy: 0.9863 - loss: 0.1238 - val_accuracy: 0.5600 - val_loss: 1.5153 Epoch 221/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 25ms/step - accuracy: 0.9738 - loss: 0.1320 - val_accuracy: 0.5600 - val_loss: 1.5700 Epoch 222/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 25ms/step - accuracy: 0.9290 - loss: 0.1466 - val_accuracy: 0.5600 - val_loss: 1.6022 Epoch 223/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 27ms/step - accuracy: 0.9009 - loss: 0.2581 - val_accuracy: 0.5600 - val_loss: 1.5669 Epoch 224/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 26ms/step - accuracy: 0.9487 - loss: 0.1508 - val_accuracy: 0.5600 - val_loss: 1.5068 Epoch 225/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 26ms/step - accuracy: 0.9290 - loss: 0.2018 - val_accuracy: 0.5600 - val_loss: 1.5059 Epoch 226/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 26ms/step - accuracy: 0.9809 - loss: 0.1095 - val_accuracy: 0.5600 - val_loss: 1.4921 Epoch 227/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 27ms/step - accuracy: 0.9705 - loss: 0.1448 - val_accuracy: 0.5600 - val_loss: 1.4641 Epoch 228/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 26ms/step - accuracy: 0.9721 - loss: 0.1497 - val_accuracy: 0.6000 - val_loss: 1.4620 Epoch 229/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 25ms/step - accuracy: 0.9507 - loss: 0.1821 - val_accuracy: 0.6000 - val_loss: 1.4386 Epoch 230/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 25ms/step - accuracy: 0.8753 - loss: 0.3179 - val_accuracy: 0.5200 - val_loss: 1.3097 Epoch 231/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 27ms/step - accuracy: 0.9553 - loss: 0.1443 - val_accuracy: 0.6000 - val_loss: 1.2327 Epoch 232/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 27ms/step - accuracy: 0.9769 - loss: 0.1224 - val_accuracy: 0.6000 - val_loss: 1.1621 Epoch 233/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 26ms/step - accuracy: 0.9461 - loss: 0.2060 - val_accuracy: 0.6400 - val_loss: 1.1107 Epoch 234/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 27ms/step - accuracy: 0.9754 - loss: 0.1321 - val_accuracy: 0.6400 - val_loss: 1.0963 Epoch 235/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 25ms/step - accuracy: 0.9588 - loss: 0.1411 
- val_accuracy: 0.6800 - val_loss: 1.0265 Epoch 236/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 26ms/step - accuracy: 0.9601 - loss: 0.1325 - val_accuracy: 0.6400 - val_loss: 0.9242 Epoch 237/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 70ms/step - accuracy: 0.9775 - loss: 0.1257 - val_accuracy: 0.6400 - val_loss: 0.8814 Epoch 238/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 31ms/step - accuracy: 0.9822 - loss: 0.1151 - val_accuracy: 0.6400 - val_loss: 0.8611 Epoch 239/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 32ms/step - accuracy: 0.9601 - loss: 0.1137 - val_accuracy: 0.6400 - val_loss: 0.8620 Epoch 240/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 33ms/step - accuracy: 0.9366 - loss: 0.1873 - val_accuracy: 0.6800 - val_loss: 0.8792 Epoch 241/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 28ms/step - accuracy: 0.9223 - loss: 0.2301 - val_accuracy: 0.6400 - val_loss: 0.9009 Epoch 242/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 30ms/step - accuracy: 0.9635 - loss: 0.1440 - val_accuracy: 0.6400 - val_loss: 0.9072 Epoch 243/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 26ms/step - accuracy: 0.9876 - loss: 0.1451 - val_accuracy: 0.6400 - val_loss: 0.9101 Epoch 244/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 25ms/step - accuracy: 0.9733 - loss: 0.1613 - val_accuracy: 0.6000 - val_loss: 0.8990 Epoch 245/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 23ms/step - accuracy: 0.9504 - loss: 0.1472 - val_accuracy: 0.6000 - val_loss: 0.8861 Epoch 246/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 23ms/step - accuracy: 0.9752 - loss: 0.1231 - val_accuracy: 0.6400 - val_loss: 0.8603 Epoch 247/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 23ms/step - accuracy: 0.9254 - loss: 0.2222 - val_accuracy: 0.6800 - val_loss: 0.8459 Epoch 248/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 25ms/step - accuracy: 0.9256 - loss: 0.2066 - val_accuracy: 0.6800 - val_loss: 0.8472 Epoch 249/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 25ms/step - accuracy: 0.9492 - loss: 0.2036 - val_accuracy: 0.7200 - val_loss: 0.8513 Epoch 250/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 25ms/step - accuracy: 0.9891 - loss: 0.0615 - val_accuracy: 0.7200 - val_loss: 0.8615 Epoch 251/300 5/5 
━━━━━━━━━━━━━━━━━━━━ 0s 27ms/step - accuracy: 1.0000 - loss: 0.1354 - val_accuracy: 0.6400 - val_loss: 0.8754 Epoch 252/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 28ms/step - accuracy: 0.9408 - loss: 0.1365 - val_accuracy: 0.6800 - val_loss: 0.8683 Epoch 253/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 27ms/step - accuracy: 0.9754 - loss: 0.1071 - val_accuracy: 0.6800 - val_loss: 0.8571 Epoch 254/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 26ms/step - accuracy: 0.9863 - loss: 0.1441 - val_accuracy: 0.6800 - val_loss: 0.8248 Epoch 255/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 28ms/step - accuracy: 0.9793 - loss: 0.0837 - val_accuracy: 0.6800 - val_loss: 0.8185 Epoch 256/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 26ms/step - accuracy: 0.9601 - loss: 0.1215 - val_accuracy: 0.6800 - val_loss: 0.8277 Epoch 257/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 27ms/step - accuracy: 0.9739 - loss: 0.1031 - val_accuracy: 0.6400 - val_loss: 0.8363 Epoch 258/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 27ms/step - accuracy: 0.9760 - loss: 0.1191 - val_accuracy: 0.6800 - val_loss: 0.8115 Epoch 259/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 27ms/step - accuracy: 0.9966 - loss: 0.0838 - val_accuracy: 0.7200 - val_loss: 0.7921 Epoch 260/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 26ms/step - accuracy: 0.9850 - loss: 0.0806 - val_accuracy: 0.7600 - val_loss: 0.7687 Epoch 261/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 25ms/step - accuracy: 0.9669 - loss: 0.1452 - val_accuracy: 0.8000 - val_loss: 0.7469 Epoch 262/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 28ms/step - accuracy: 0.9945 - loss: 0.0806 - val_accuracy: 0.7600 - val_loss: 0.7396 Epoch 263/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 28ms/step - accuracy: 0.9180 - loss: 0.2361 - val_accuracy: 0.7200 - val_loss: 0.7498 Epoch 264/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 28ms/step - accuracy: 0.9809 - loss: 0.1068 - val_accuracy: 0.7200 - val_loss: 0.7608 Epoch 265/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 27ms/step - accuracy: 0.9884 - loss: 0.1189 - val_accuracy: 0.6800 - val_loss: 0.7716 Epoch 266/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 29ms/step - accuracy: 0.9891 - loss: 0.0939 - 
val_accuracy: 0.6800 - val_loss: 0.8052 Epoch 267/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 25ms/step - accuracy: 0.9586 - loss: 0.1093 - val_accuracy: 0.6800 - val_loss: 0.8128 Epoch 268/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 28ms/step - accuracy: 0.9581 - loss: 0.1308 - val_accuracy: 0.6800 - val_loss: 0.8458 Epoch 269/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 27ms/step - accuracy: 0.9490 - loss: 0.1269 - val_accuracy: 0.6800 - val_loss: 0.8428 Epoch 270/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 27ms/step - accuracy: 0.9629 - loss: 0.0915 - val_accuracy: 0.6800 - val_loss: 0.8552 Epoch 271/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 27ms/step - accuracy: 0.9574 - loss: 0.1456 - val_accuracy: 0.6800 - val_loss: 0.9069 Epoch 272/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 26ms/step - accuracy: 0.9594 - loss: 0.0873 - val_accuracy: 0.6800 - val_loss: 0.9523 Epoch 273/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 26ms/step - accuracy: 0.9793 - loss: 0.1129 - val_accuracy: 0.6800 - val_loss: 0.9951 Epoch 274/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 26ms/step - accuracy: 0.9876 - loss: 0.0748 - val_accuracy: 0.6800 - val_loss: 1.0009 Epoch 275/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 28ms/step - accuracy: 0.9878 - loss: 0.0679 - val_accuracy: 0.6800 - val_loss: 0.9950 Epoch 276/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 27ms/step - accuracy: 0.9878 - loss: 0.0949 - val_accuracy: 0.6800 - val_loss: 0.9799 Epoch 277/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 72ms/step - accuracy: 0.9793 - loss: 0.0665 - val_accuracy: 0.6800 - val_loss: 0.9296 Epoch 278/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 32ms/step - accuracy: 0.9714 - loss: 0.1172 - val_accuracy: 0.6800 - val_loss: 0.9079 Epoch 279/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 28ms/step - accuracy: 0.9532 - loss: 0.1376 - val_accuracy: 0.6800 - val_loss: 0.9363 Epoch 280/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 28ms/step - accuracy: 0.9830 - loss: 0.0943 - val_accuracy: 0.6800 - val_loss: 0.9509 Epoch 281/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 27ms/step - accuracy: 0.9794 - loss: 0.0784 - val_accuracy: 0.6800 - val_loss: 0.9197 Epoch 282/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 
0s 26ms/step - accuracy: 0.9643 - loss: 0.1283 - val_accuracy: 0.6800 - val_loss: 0.9123 Epoch 283/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 26ms/step - accuracy: 0.9876 - loss: 0.1319 - val_accuracy: 0.6800 - val_loss: 0.9163 Epoch 284/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 25ms/step - accuracy: 0.9160 - loss: 0.1717 - val_accuracy: 0.6800 - val_loss: 0.9543 Epoch 285/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 30ms/step - accuracy: 0.9918 - loss: 0.0358 - val_accuracy: 0.6400 - val_loss: 0.9807 Epoch 286/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 29ms/step - accuracy: 0.9850 - loss: 0.0692 - val_accuracy: 0.6400 - val_loss: 0.9801 Epoch 287/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 28ms/step - accuracy: 0.9656 - loss: 0.1161 - val_accuracy: 0.6800 - val_loss: 0.9725 Epoch 288/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 25ms/step - accuracy: 0.9534 - loss: 0.1510 - val_accuracy: 0.6800 - val_loss: 0.9662 Epoch 289/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 24ms/step - accuracy: 0.9781 - loss: 0.0988 - val_accuracy: 0.7200 - val_loss: 0.9126 Epoch 290/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 27ms/step - accuracy: 0.9768 - loss: 0.0931 - val_accuracy: 0.7200 - val_loss: 0.9003 Epoch 291/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 28ms/step - accuracy: 0.9484 - loss: 0.1763 - val_accuracy: 0.7200 - val_loss: 0.9239 Epoch 292/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 29ms/step - accuracy: 1.0000 - loss: 0.0597 - val_accuracy: 0.6400 - val_loss: 1.1420 Epoch 293/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 28ms/step - accuracy: 0.9788 - loss: 0.0933 - val_accuracy: 0.6400 - val_loss: 1.2226 Epoch 294/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 28ms/step - accuracy: 0.9615 - loss: 0.1950 - val_accuracy: 0.6400 - val_loss: 1.2819 Epoch 295/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 28ms/step - accuracy: 0.9656 - loss: 0.0880 - val_accuracy: 0.6400 - val_loss: 1.3293 Epoch 296/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 27ms/step - accuracy: 0.9733 - loss: 0.1183 - val_accuracy: 0.6000 - val_loss: 1.3543 Epoch 297/300 5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 28ms/step - accuracy: 0.9788 - loss: 0.0733 - val_accuracy: 0.6400 - 
val_loss: 1.3087
Epoch 298/300
5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 26ms/step - accuracy: 0.9503 - loss: 0.1295 - val_accuracy: 0.6400 - val_loss: 1.2352
Epoch 299/300
5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 29ms/step - accuracy: 0.9407 - loss: 0.1359 - val_accuracy: 0.6400 - val_loss: 1.1712
Epoch 300/300
5/5 ━━━━━━━━━━━━━━━━━━━━ 0s 29ms/step - accuracy: 0.9614 - loss: 0.1265 - val_accuracy: 0.6400 - val_loss: 1.1698
CPU times: user 54.6 s, sys: 9.17 s, total: 1min 3s
Wall time: 56.2 s

5/5 [==============================] - 0s 11ms/step - loss: 0.6206 - accuracy: 0.8182 - val_loss: 1.1899 - val_accuracy: 0.5200
Epoch 60/300
5/5 [==============================] - 0s 10ms/step - loss: 0.6564 - accuracy: 0.7576 - val_loss: 1.1962 - val_accuracy: 0.5200
Epoch 61/300
5/5 [==============================] - 0s 30ms/step - loss: 0.7866 - accuracy: 0.7172 - val_loss: 1.1957 - val_accuracy: 0.5200
Epoch 62/300
5/5 [==============================] - 0s 10ms/step - loss: 0.6576 - accuracy: 0.7273 - val_loss: 1.2127 - val_accuracy: 0.5200
Epoch 63/300
5/5 [==============================] - 0s 10ms/step - loss: 0.5989 - accuracy: 0.7879 - val_loss: 1.2209 - val_accuracy: 0.5200
Epoch 64/300
5/5 [==============================] - 0s 10ms/step - loss: 0.5561 - accuracy: 0.8283 - val_loss: 1.2014 - val_accuracy: 0.5200
Epoch 65/300
5/5 [==============================] - 0s 10ms/step - loss: 0.5866 - accuracy: 0.8485 - val_loss: 1.1845 - val_accuracy: 0.5200
Epoch 66/300
5/5 [==============================] - 0s 9ms/step - loss: 0.5624 - accuracy: 0.7879 - val_loss: 1.1667 - val_accuracy: 0.5200
Epoch 67/300
5/5 [==============================] - 0s 10ms/step - loss: 0.5939 - accuracy: 0.8182 - val_loss: 1.1534 - val_accuracy: 0.5200
Epoch 68/300
5/5 [==============================] - 0s 10ms/step - loss: 0.6402 - accuracy: 0.7576 - val_loss: 1.1429 - val_accuracy: 0.5200
Epoch 69/300
5/5 [==============================] - 0s 9ms/step - loss: 0.6282 - accuracy: 0.8182 - val_loss: 1.1326 - val_accuracy:
0.5600 Epoch 70/300 5/5 [==============================] - 0s 10ms/step - loss: 0.4443 - accuracy: 0.8788 - val_loss: 1.1170 - val_accuracy: 0.5600 Epoch 71/300 5/5 [==============================] - 0s 9ms/step - loss: 0.4632 - accuracy: 0.8485 - val_loss: 1.1055 - val_accuracy: 0.5600 Epoch 72/300 5/5 [==============================] - 0s 10ms/step - loss: 0.5103 - accuracy: 0.8485 - val_loss: 1.0968 - val_accuracy: 0.5600 Epoch 73/300 5/5 [==============================] - 0s 15ms/step - loss: 0.4771 - accuracy: 0.8889 - val_loss: 1.0730 - val_accuracy: 0.6000 Epoch 74/300 5/5 [==============================] - 0s 10ms/step - loss: 0.5252 - accuracy: 0.8485 - val_loss: 1.0790 - val_accuracy: 0.6400 Epoch 75/300 5/5 [==============================] - 0s 28ms/step - loss: 0.5182 - accuracy: 0.8384 - val_loss: 1.0909 - val_accuracy: 0.6000 Epoch 76/300 5/5 [==============================] - 0s 11ms/step - loss: 0.3781 - accuracy: 0.9091 - val_loss: 1.1057 - val_accuracy: 0.6000 Epoch 77/300 5/5 [==============================] - 0s 16ms/step - loss: 0.4580 - accuracy: 0.8384 - val_loss: 1.1166 - val_accuracy: 0.6000 Epoch 78/300 5/5 [==============================] - 0s 10ms/step - loss: 0.4712 - accuracy: 0.8788 - val_loss: 1.1395 - val_accuracy: 0.6000 Epoch 79/300 5/5 [==============================] - 0s 18ms/step - loss: 0.4269 - accuracy: 0.8586 - val_loss: 1.1721 - val_accuracy: 0.5200 Epoch 80/300 5/5 [==============================] - 0s 10ms/step - loss: 0.4910 - accuracy: 0.7980 - val_loss: 1.1820 - val_accuracy: 0.5200 Epoch 81/300 5/5 [==============================] - 0s 10ms/step - loss: 0.4699 - accuracy: 0.8687 - val_loss: 1.1861 - val_accuracy: 0.5600 Epoch 82/300 5/5 [==============================] - 0s 10ms/step - loss: 0.4194 - accuracy: 0.8788 - val_loss: 1.1940 - val_accuracy: 0.5600 Epoch 83/300 5/5 [==============================] - 0s 10ms/step - loss: 0.4095 - accuracy: 0.8687 - val_loss: 1.1971 - val_accuracy: 0.5600 Epoch 84/300 5/5 
[==============================] - 0s 9ms/step - loss: 0.4048 - accuracy: 0.8788 - val_loss: 1.1910 - val_accuracy: 0.5600 Epoch 85/300 5/5 [==============================] - 0s 11ms/step - loss: 0.5509 - accuracy: 0.8081 - val_loss: 1.1904 - val_accuracy: 0.4800 Epoch 86/300 5/5 [==============================] - 0s 10ms/step - loss: 0.4884 - accuracy: 0.8283 - val_loss: 1.1950 - val_accuracy: 0.4800 Epoch 87/300 5/5 [==============================] - 0s 10ms/step - loss: 0.4196 - accuracy: 0.8889 - val_loss: 1.1899 - val_accuracy: 0.5200 Epoch 88/300 5/5 [==============================] - 0s 10ms/step - loss: 0.4157 - accuracy: 0.8485 - val_loss: 1.2238 - val_accuracy: 0.5200 Epoch 89/300 5/5 [==============================] - 0s 9ms/step - loss: 0.4900 - accuracy: 0.8485 - val_loss: 1.2246 - val_accuracy: 0.5200 Epoch 90/300 5/5 [==============================] - 0s 10ms/step - loss: 0.3359 - accuracy: 0.9091 - val_loss: 1.2065 - val_accuracy: 0.5200 Epoch 91/300 5/5 [==============================] - 0s 9ms/step - loss: 0.4218 - accuracy: 0.8788 - val_loss: 1.2021 - val_accuracy: 0.5200 Epoch 92/300 5/5 [==============================] - 0s 9ms/step - loss: 0.5559 - accuracy: 0.8081 - val_loss: 1.2235 - val_accuracy: 0.5200 Epoch 93/300 5/5 [==============================] - 0s 10ms/step - loss: 0.4268 - accuracy: 0.8485 - val_loss: 1.2393 - val_accuracy: 0.5200 Epoch 94/300 5/5 [==============================] - 0s 9ms/step - loss: 0.3674 - accuracy: 0.9293 - val_loss: 1.2544 - val_accuracy: 0.5200 Epoch 95/300 5/5 [==============================] - 0s 10ms/step - loss: 0.3332 - accuracy: 0.9192 - val_loss: 1.2941 - val_accuracy: 0.5200 Epoch 96/300 5/5 [==============================] - 0s 10ms/step - loss: 0.3505 - accuracy: 0.8990 - val_loss: 1.3192 - val_accuracy: 0.5200 Epoch 97/300 5/5 [==============================] - 0s 9ms/step - loss: 0.3442 - accuracy: 0.9091 - val_loss: 1.3132 - val_accuracy: 0.5200 Epoch 98/300 5/5 
[==============================] - 0s 10ms/step - loss: 0.2849 - accuracy: 0.9495 - val_loss: 1.2946 - val_accuracy: 0.5200 Epoch 99/300 5/5 [==============================] - 0s 10ms/step - loss: 0.4299 - accuracy: 0.8687 - val_loss: 1.2577 - val_accuracy: 0.5200 Epoch 100/300 5/5 [==============================] - 0s 36ms/step - loss: 0.3711 - accuracy: 0.8788 - val_loss: 1.2366 - val_accuracy: 0.5200 Epoch 101/300 5/5 [==============================] - 0s 10ms/step - loss: 0.4541 - accuracy: 0.8485 - val_loss: 1.2062 - val_accuracy: 0.5200 Epoch 102/300 5/5 [==============================] - 0s 10ms/step - loss: 0.3570 - accuracy: 0.8687 - val_loss: 1.1883 - val_accuracy: 0.5200 Epoch 103/300 5/5 [==============================] - 0s 9ms/step - loss: 0.3579 - accuracy: 0.8990 - val_loss: 1.1813 - val_accuracy: 0.5200 Epoch 104/300 5/5 [==============================] - 0s 9ms/step - loss: 0.2861 - accuracy: 0.9293 - val_loss: 1.1807 - val_accuracy: 0.5200 Epoch 105/300 5/5 [==============================] - 0s 10ms/step - loss: 0.3789 - accuracy: 0.8586 - val_loss: 1.1829 - val_accuracy: 0.5200 Epoch 106/300 5/5 [==============================] - 0s 9ms/step - loss: 0.3457 - accuracy: 0.8990 - val_loss: 1.2079 - val_accuracy: 0.5200 Epoch 107/300 5/5 [==============================] - 0s 10ms/step - loss: 0.2590 - accuracy: 0.9394 - val_loss: 1.2311 - val_accuracy: 0.5200 Epoch 108/300 5/5 [==============================] - 0s 11ms/step - loss: 0.3527 - accuracy: 0.9091 - val_loss: 1.2570 - val_accuracy: 0.5200 Epoch 109/300 5/5 [==============================] - 0s 9ms/step - loss: 0.3358 - accuracy: 0.8889 - val_loss: 1.2947 - val_accuracy: 0.5200 Epoch 110/300 5/5 [==============================] - 0s 10ms/step - loss: 0.2534 - accuracy: 0.9394 - val_loss: 1.3408 - val_accuracy: 0.5200 Epoch 111/300 5/5 [==============================] - 0s 11ms/step - loss: 0.2463 - accuracy: 0.9394 - val_loss: 1.3770 - val_accuracy: 0.5200 Epoch 112/300 5/5 
[==============================] - 0s 10ms/step - loss: 0.3498 - accuracy: 0.9091 - val_loss: 1.3980 - val_accuracy: 0.5200 Epoch 113/300 5/5 [==============================] - 0s 10ms/step - loss: 0.3142 - accuracy: 0.9192 - val_loss: 1.3979 - val_accuracy: 0.5200 Epoch 114/300 5/5 [==============================] - 0s 9ms/step - loss: 0.2258 - accuracy: 0.9596 - val_loss: 1.3949 - val_accuracy: 0.5200 Epoch 115/300 5/5 [==============================] - 0s 10ms/step - loss: 0.3503 - accuracy: 0.9192 - val_loss: 1.3928 - val_accuracy: 0.5200 Epoch 116/300 5/5 [==============================] - 0s 9ms/step - loss: 0.3959 - accuracy: 0.8990 - val_loss: 1.3936 - val_accuracy: 0.5200 Epoch 117/300 5/5 [==============================] - 0s 10ms/step - loss: 0.3461 - accuracy: 0.9091 - val_loss: 1.4017 - val_accuracy: 0.5200 Epoch 118/300 5/5 [==============================] - 0s 10ms/step - loss: 0.2749 - accuracy: 0.9293 - val_loss: 1.4229 - val_accuracy: 0.5200 Epoch 119/300 5/5 [==============================] - 0s 9ms/step - loss: 0.2717 - accuracy: 0.9293 - val_loss: 1.4275 - val_accuracy: 0.5200 Epoch 120/300 5/5 [==============================] - 0s 10ms/step - loss: 0.2813 - accuracy: 0.9293 - val_loss: 1.4212 - val_accuracy: 0.5200 Epoch 121/300 5/5 [==============================] - 0s 11ms/step - loss: 0.2984 - accuracy: 0.8889 - val_loss: 1.4083 - val_accuracy: 0.5200 Epoch 122/300 5/5 [==============================] - 0s 12ms/step - loss: 0.3248 - accuracy: 0.9192 - val_loss: 1.3932 - val_accuracy: 0.5200 Epoch 123/300 5/5 [==============================] - 0s 11ms/step - loss: 0.2410 - accuracy: 0.9394 - val_loss: 1.3802 - val_accuracy: 0.5200 Epoch 124/300 5/5 [==============================] - 0s 10ms/step - loss: 0.3152 - accuracy: 0.8889 - val_loss: 1.3707 - val_accuracy: 0.5200 Epoch 125/300 5/5 [==============================] - 0s 11ms/step - loss: 0.3380 - accuracy: 0.8889 - val_loss: 1.3422 - val_accuracy: 0.5200 Epoch 126/300 5/5 
[==============================] - 0s 11ms/step - loss: 0.2511 - accuracy: 0.9192 - val_loss: 1.3290 - val_accuracy: 0.5200 Epoch 127/300 5/5 [==============================] - 0s 9ms/step - loss: 0.2840 - accuracy: 0.9192 - val_loss: 1.3449 - val_accuracy: 0.5200 Epoch 128/300 5/5 [==============================] - 0s 11ms/step - loss: 0.2495 - accuracy: 0.9495 - val_loss: 1.3590 - val_accuracy: 0.5200 Epoch 129/300 5/5 [==============================] - 0s 14ms/step - loss: 0.2076 - accuracy: 0.9495 - val_loss: 1.3600 - val_accuracy: 0.5200 Epoch 130/300 5/5 [==============================] - 0s 14ms/step - loss: 0.2681 - accuracy: 0.9394 - val_loss: 1.3473 - val_accuracy: 0.5200 Epoch 131/300 5/5 [==============================] - 0s 16ms/step - loss: 0.2488 - accuracy: 0.9293 - val_loss: 1.3310 - val_accuracy: 0.5200 Epoch 132/300 5/5 [==============================] - 0s 17ms/step - loss: 0.2132 - accuracy: 0.9596 - val_loss: 1.3315 - val_accuracy: 0.5200 Epoch 133/300 5/5 [==============================] - 0s 14ms/step - loss: 0.2855 - accuracy: 0.9192 - val_loss: 1.3284 - val_accuracy: 0.5200 Epoch 134/300 5/5 [==============================] - 0s 56ms/step - loss: 0.2401 - accuracy: 0.9495 - val_loss: 1.3312 - val_accuracy: 0.5200 Epoch 135/300 5/5 [==============================] - 0s 17ms/step - loss: 0.3005 - accuracy: 0.8990 - val_loss: 1.3492 - val_accuracy: 0.5200 Epoch 136/300 5/5 [==============================] - 0s 16ms/step - loss: 0.2757 - accuracy: 0.9192 - val_loss: 1.3810 - val_accuracy: 0.5200 Epoch 137/300 5/5 [==============================] - 0s 31ms/step - loss: 0.1777 - accuracy: 0.9697 - val_loss: 1.4349 - val_accuracy: 0.5200 Epoch 138/300 5/5 [==============================] - 0s 15ms/step - loss: 0.2364 - accuracy: 0.9596 - val_loss: 1.4826 - val_accuracy: 0.5200 Epoch 139/300 5/5 [==============================] - 0s 16ms/step - loss: 0.1990 - accuracy: 0.9798 - val_loss: 1.5212 - val_accuracy: 0.5200 Epoch 140/300 5/5 
[==============================] - 0s 11ms/step - loss: 0.2061 - accuracy: 0.9293 - val_loss: 1.5476 - val_accuracy: 0.5200 Epoch 141/300 5/5 [==============================] - 0s 11ms/step - loss: 0.2731 - accuracy: 0.9192 - val_loss: 1.5597 - val_accuracy: 0.4800 Epoch 142/300 5/5 [==============================] - 0s 10ms/step - loss: 0.2449 - accuracy: 0.9394 - val_loss: 1.5239 - val_accuracy: 0.4800 Epoch 143/300 5/5 [==============================] - 0s 10ms/step - loss: 0.1948 - accuracy: 0.9596 - val_loss: 1.5008 - val_accuracy: 0.4800 Epoch 144/300 5/5 [==============================] - 0s 10ms/step - loss: 0.1962 - accuracy: 0.9596 - val_loss: 1.4953 - val_accuracy: 0.4800 Epoch 145/300 5/5 [==============================] - 0s 9ms/step - loss: 0.2167 - accuracy: 0.9192 - val_loss: 1.4841 - val_accuracy: 0.4800 Epoch 146/300 5/5 [==============================] - 0s 10ms/step - loss: 0.2214 - accuracy: 0.9495 - val_loss: 1.4630 - val_accuracy: 0.4800 Epoch 147/300 5/5 [==============================] - 0s 9ms/step - loss: 0.2975 - accuracy: 0.9192 - val_loss: 1.4711 - val_accuracy: 0.4800 Epoch 148/300 5/5 [==============================] - 0s 10ms/step - loss: 0.2330 - accuracy: 0.9495 - val_loss: 1.4720 - val_accuracy: 0.4800 Epoch 149/300 5/5 [==============================] - 0s 10ms/step - loss: 0.2274 - accuracy: 0.9192 - val_loss: 1.4679 - val_accuracy: 0.4800 Epoch 150/300 5/5 [==============================] - 0s 9ms/step - loss: 0.1811 - accuracy: 0.9697 - val_loss: 1.4592 - val_accuracy: 0.4800 Epoch 151/300 5/5 [==============================] - 0s 10ms/step - loss: 0.2102 - accuracy: 0.9596 - val_loss: 1.4627 - val_accuracy: 0.5200 Epoch 152/300 5/5 [==============================] - 0s 9ms/step - loss: 0.2380 - accuracy: 0.9495 - val_loss: 1.4665 - val_accuracy: 0.5200 Epoch 153/300 5/5 [==============================] - 0s 9ms/step - loss: 0.1704 - accuracy: 0.9596 - val_loss: 1.4623 - val_accuracy: 0.5200 Epoch 154/300 5/5 
[==============================] - 0s 10ms/step - loss: 0.1881 - accuracy: 0.9495 - val_loss: 1.4365 - val_accuracy: 0.5200 Epoch 155/300 5/5 [==============================] - 0s 11ms/step - loss: 0.1582 - accuracy: 0.9697 - val_loss: 1.4168 - val_accuracy: 0.5200 Epoch 156/300 5/5 [==============================] - 0s 10ms/step - loss: 0.1781 - accuracy: 0.9394 - val_loss: 1.4047 - val_accuracy: 0.5200 Epoch 157/300 5/5 [==============================] - 0s 10ms/step - loss: 0.2442 - accuracy: 0.8990 - val_loss: 1.3924 - val_accuracy: 0.5200 Epoch 158/300 5/5 [==============================] - 0s 10ms/step - loss: 0.2896 - accuracy: 0.9091 - val_loss: 1.3655 - val_accuracy: 0.5200 Epoch 159/300 5/5 [==============================] - 0s 10ms/step - loss: 0.1905 - accuracy: 0.9596 - val_loss: 1.3695 - val_accuracy: 0.5200 Epoch 160/300 5/5 [==============================] - 0s 9ms/step - loss: 0.1716 - accuracy: 0.9495 - val_loss: 1.4077 - val_accuracy: 0.5200 Epoch 161/300 5/5 [==============================] - 0s 10ms/step - loss: 0.1918 - accuracy: 0.9495 - val_loss: 1.4592 - val_accuracy: 0.5200 Epoch 162/300 5/5 [==============================] - 0s 11ms/step - loss: 0.1977 - accuracy: 0.9495 - val_loss: 1.4918 - val_accuracy: 0.5200 Epoch 163/300 5/5 [==============================] - 0s 10ms/step - loss: 0.1562 - accuracy: 0.9697 - val_loss: 1.4947 - val_accuracy: 0.5200 Epoch 164/300 5/5 [==============================] - 0s 10ms/step - loss: 0.2337 - accuracy: 0.9394 - val_loss: 1.4922 - val_accuracy: 0.5200 Epoch 165/300 5/5 [==============================] - 0s 10ms/step - loss: 0.2967 - accuracy: 0.8788 - val_loss: 1.4810 - val_accuracy: 0.5200 Epoch 166/300 5/5 [==============================] - 0s 10ms/step - loss: 0.2716 - accuracy: 0.9091 - val_loss: 1.4867 - val_accuracy: 0.5200 Epoch 167/300 5/5 [==============================] - 0s 9ms/step - loss: 0.1742 - accuracy: 0.9697 - val_loss: 1.4898 - val_accuracy: 0.5200 Epoch 168/300 5/5 
[==============================] - 0s 11ms/step - loss: 0.1361 - accuracy: 0.9798 - val_loss: 1.5008 - val_accuracy: 0.5200 Epoch 169/300 5/5 [==============================] - 0s 10ms/step - loss: 0.1318 - accuracy: 0.9697 - val_loss: 1.4963 - val_accuracy: 0.5200 Epoch 170/300 5/5 [==============================] - 0s 9ms/step - loss: 0.2049 - accuracy: 0.9697 - val_loss: 1.5031 - val_accuracy: 0.5200 Epoch 171/300 5/5 [==============================] - 0s 11ms/step - loss: 0.1784 - accuracy: 0.9596 - val_loss: 1.5263 - val_accuracy: 0.5200 Epoch 172/300 5/5 [==============================] - 0s 10ms/step - loss: 0.1218 - accuracy: 0.9697 - val_loss: 1.5500 - val_accuracy: 0.5200 Epoch 173/300 5/5 [==============================] - 0s 10ms/step - loss: 0.2701 - accuracy: 0.9091 - val_loss: 1.5800 - val_accuracy: 0.5200 Epoch 174/300 5/5 [==============================] - 0s 10ms/step - loss: 0.1305 - accuracy: 0.9899 - val_loss: 1.5986 - val_accuracy: 0.5200 Epoch 175/300 5/5 [==============================] - 0s 9ms/step - loss: 0.1078 - accuracy: 0.9899 - val_loss: 1.5982 - val_accuracy: 0.4800 Epoch 176/300 5/5 [==============================] - 0s 31ms/step - loss: 0.1492 - accuracy: 0.9495 - val_loss: 1.5742 - val_accuracy: 0.4800 Epoch 177/300 5/5 [==============================] - 0s 10ms/step - loss: 0.2203 - accuracy: 0.9293 - val_loss: 1.5666 - val_accuracy: 0.4800 Epoch 178/300 5/5 [==============================] - 0s 9ms/step - loss: 0.1498 - accuracy: 0.9596 - val_loss: 1.5672 - val_accuracy: 0.4800 Epoch 179/300 5/5 [==============================] - 0s 12ms/step - loss: 0.2119 - accuracy: 0.9394 - val_loss: 1.5702 - val_accuracy: 0.4800 Epoch 180/300 5/5 [==============================] - 0s 11ms/step - loss: 0.2052 - accuracy: 0.9394 - val_loss: 1.6045 - val_accuracy: 0.4800 Epoch 181/300 5/5 [==============================] - 0s 11ms/step - loss: 0.1686 - accuracy: 0.9596 - val_loss: 1.6179 - val_accuracy: 0.4800 Epoch 182/300 5/5 
[==============================] - 0s 11ms/step - loss: 0.1190 - accuracy: 0.9899 - val_loss: 1.6063 - val_accuracy: 0.4800 Epoch 183/300 5/5 [==============================] - 0s 10ms/step - loss: 0.1796 - accuracy: 0.9697 - val_loss: 1.6009 - val_accuracy: 0.4800 Epoch 184/300 5/5 [==============================] - 0s 11ms/step - loss: 0.2071 - accuracy: 0.9394 - val_loss: 1.6223 - val_accuracy: 0.4800 Epoch 185/300 5/5 [==============================] - 0s 11ms/step - loss: 0.1907 - accuracy: 0.9495 - val_loss: 1.6199 - val_accuracy: 0.4800 Epoch 186/300 5/5 [==============================] - 0s 11ms/step - loss: 0.1701 - accuracy: 0.9697 - val_loss: 1.5943 - val_accuracy: 0.4800 Epoch 187/300 5/5 [==============================] - 0s 11ms/step - loss: 0.1242 - accuracy: 0.9798 - val_loss: 1.5534 - val_accuracy: 0.4800 Epoch 188/300 5/5 [==============================] - 0s 10ms/step - loss: 0.1074 - accuracy: 0.9899 - val_loss: 1.5380 - val_accuracy: 0.4800 Epoch 189/300 5/5 [==============================] - 0s 9ms/step - loss: 0.1133 - accuracy: 0.9798 - val_loss: 1.5176 - val_accuracy: 0.4800 Epoch 190/300 5/5 [==============================] - 0s 10ms/step - loss: 0.2135 - accuracy: 0.9192 - val_loss: 1.5239 - val_accuracy: 0.4800 Epoch 191/300 5/5 [==============================] - 0s 11ms/step - loss: 0.1153 - accuracy: 1.0000 - val_loss: 1.5022 - val_accuracy: 0.4800 Epoch 192/300 5/5 [==============================] - 0s 11ms/step - loss: 0.1657 - accuracy: 0.9697 - val_loss: 1.4831 - val_accuracy: 0.4800 Epoch 193/300 5/5 [==============================] - 0s 12ms/step - loss: 0.1709 - accuracy: 0.9596 - val_loss: 1.5013 - val_accuracy: 0.4800 Epoch 194/300 5/5 [==============================] - 0s 12ms/step - loss: 0.1047 - accuracy: 0.9697 - val_loss: 1.5316 - val_accuracy: 0.4800 Epoch 195/300 5/5 [==============================] - 0s 11ms/step - loss: 0.1890 - accuracy: 0.9394 - val_loss: 1.5205 - val_accuracy: 0.4800 Epoch 196/300 5/5 
[==============================] - 0s 15ms/step - loss: 0.1211 - accuracy: 0.9899 - val_loss: 1.4993 - val_accuracy: 0.4800 Epoch 197/300 5/5 [==============================] - 0s 11ms/step - loss: 0.1964 - accuracy: 0.9697 - val_loss: 1.4950 - val_accuracy: 0.4800 Epoch 198/300 5/5 [==============================] - 0s 10ms/step - loss: 0.1687 - accuracy: 0.9596 - val_loss: 1.4893 - val_accuracy: 0.4800 Epoch 199/300 5/5 [==============================] - 0s 10ms/step - loss: 0.1903 - accuracy: 0.9495 - val_loss: 1.4987 - val_accuracy: 0.4800 Epoch 200/300 5/5 [==============================] - 0s 10ms/step - loss: 0.1121 - accuracy: 0.9798 - val_loss: 1.5120 - val_accuracy: 0.5200 Epoch 201/300 5/5 [==============================] - 0s 10ms/step - loss: 0.1858 - accuracy: 0.9293 - val_loss: 1.5310 - val_accuracy: 0.4800 Epoch 202/300 5/5 [==============================] - 0s 11ms/step - loss: 0.1466 - accuracy: 0.9798 - val_loss: 1.5289 - val_accuracy: 0.4800 Epoch 203/300 5/5 [==============================] - 0s 10ms/step - loss: 0.1250 - accuracy: 0.9697 - val_loss: 1.5239 - val_accuracy: 0.4800 Epoch 204/300 5/5 [==============================] - 0s 10ms/step - loss: 0.1730 - accuracy: 0.9495 - val_loss: 1.4926 - val_accuracy: 0.4800 Epoch 205/300 5/5 [==============================] - 0s 10ms/step - loss: 0.0957 - accuracy: 0.9899 - val_loss: 1.4790 - val_accuracy: 0.4800 Epoch 206/300 5/5 [==============================] - 0s 11ms/step - loss: 0.1037 - accuracy: 0.9899 - val_loss: 1.4629 - val_accuracy: 0.4800 Epoch 207/300 5/5 [==============================] - 0s 11ms/step - loss: 0.1392 - accuracy: 0.9697 - val_loss: 1.4683 - val_accuracy: 0.5600 Epoch 208/300 5/5 [==============================] - 0s 12ms/step - loss: 0.2097 - accuracy: 0.9293 - val_loss: 1.4372 - val_accuracy: 0.5600 Epoch 209/300 5/5 [==============================] - 0s 11ms/step - loss: 0.1829 - accuracy: 0.9293 - val_loss: 1.3519 - val_accuracy: 0.5600 Epoch 210/300 5/5 
[==============================] - 0s 11ms/step - loss: 0.1208 - accuracy: 0.9697 - val_loss: 1.3036 - val_accuracy: 0.5600 Epoch 211/300 5/5 [==============================] - 0s 11ms/step - loss: 0.2503 - accuracy: 0.9293 - val_loss: 1.2680 - val_accuracy: 0.5600 Epoch 212/300 5/5 [==============================] - 0s 11ms/step - loss: 0.1507 - accuracy: 0.9596 - val_loss: 1.2557 - val_accuracy: 0.5600 Epoch 213/300 5/5 [==============================] - 0s 12ms/step - loss: 0.1033 - accuracy: 0.9798 - val_loss: 1.2492 - val_accuracy: 0.5600 Epoch 214/300 5/5 [==============================] - 0s 12ms/step - loss: 0.1370 - accuracy: 0.9596 - val_loss: 1.2528 - val_accuracy: 0.5600 Epoch 215/300 5/5 [==============================] - 0s 13ms/step - loss: 0.1637 - accuracy: 0.9495 - val_loss: 1.2701 - val_accuracy: 0.5600 Epoch 216/300 5/5 [==============================] - 0s 13ms/step - loss: 0.1563 - accuracy: 0.9495 - val_loss: 1.3010 - val_accuracy: 0.5200 Epoch 217/300 5/5 [==============================] - 0s 14ms/step - loss: 0.1341 - accuracy: 0.9596 - val_loss: 1.3261 - val_accuracy: 0.4800 Epoch 218/300 5/5 [==============================] - 0s 16ms/step - loss: 0.2497 - accuracy: 0.9697 - val_loss: 1.3175 - val_accuracy: 0.4800 Epoch 219/300 5/5 [==============================] - 0s 13ms/step - loss: 0.1408 - accuracy: 0.9697 - val_loss: 1.3323 - val_accuracy: 0.4800 Epoch 220/300 5/5 [==============================] - 0s 13ms/step - loss: 0.1871 - accuracy: 0.9293 - val_loss: 1.3988 - val_accuracy: 0.4800 Epoch 221/300 5/5 [==============================] - 0s 12ms/step - loss: 0.1606 - accuracy: 0.9394 - val_loss: 1.4870 - val_accuracy: 0.4800 Epoch 222/300 5/5 [==============================] - 0s 12ms/step - loss: 0.1436 - accuracy: 0.9394 - val_loss: 1.5700 - val_accuracy: 0.4800 Epoch 223/300 5/5 [==============================] - 0s 11ms/step - loss: 0.0884 - accuracy: 0.9899 - val_loss: 1.6129 - val_accuracy: 0.4800 Epoch 224/300 5/5 
[==============================] - 0s 10ms/step - loss: 0.1655 - accuracy: 0.9495 - val_loss: 1.6738 - val_accuracy: 0.4800 Epoch 225/300 5/5 [==============================] - 0s 11ms/step - loss: 0.1821 - accuracy: 0.9596 - val_loss: 1.7443 - val_accuracy: 0.4800 Epoch 226/300 5/5 [==============================] - 0s 11ms/step - loss: 0.1641 - accuracy: 0.9596 - val_loss: 1.7843 - val_accuracy: 0.4800 Epoch 227/300 5/5 [==============================] - 0s 12ms/step - loss: 0.1175 - accuracy: 0.9394 - val_loss: 1.8035 - val_accuracy: 0.4800 Epoch 228/300 5/5 [==============================] - 0s 11ms/step - loss: 0.1767 - accuracy: 0.9495 - val_loss: 1.7560 - val_accuracy: 0.4800 Epoch 229/300 5/5 [==============================] - 0s 11ms/step - loss: 0.1413 - accuracy: 0.9596 - val_loss: 1.7311 - val_accuracy: 0.4800 Epoch 230/300 5/5 [==============================] - 0s 10ms/step - loss: 0.0871 - accuracy: 0.9697 - val_loss: 1.6755 - val_accuracy: 0.4800 Epoch 231/300 5/5 [==============================] - 0s 11ms/step - loss: 0.1414 - accuracy: 0.9293 - val_loss: 1.6216 - val_accuracy: 0.4800 Epoch 232/300 5/5 [==============================] - 0s 11ms/step - loss: 0.1162 - accuracy: 0.9697 - val_loss: 1.5832 - val_accuracy: 0.4800 Epoch 233/300 5/5 [==============================] - 0s 11ms/step - loss: 0.1137 - accuracy: 0.9798 - val_loss: 1.5216 - val_accuracy: 0.4800 Epoch 234/300 5/5 [==============================] - 0s 12ms/step - loss: 0.1481 - accuracy: 0.9394 - val_loss: 1.4967 - val_accuracy: 0.4800 Epoch 235/300 5/5 [==============================] - 0s 13ms/step - loss: 0.0805 - accuracy: 0.9899 - val_loss: 1.4890 - val_accuracy: 0.4800 Epoch 236/300 5/5 [==============================] - 0s 11ms/step - loss: 0.1191 - accuracy: 0.9697 - val_loss: 1.4920 - val_accuracy: 0.4800 Epoch 237/300 5/5 [==============================] - 0s 11ms/step - loss: 0.1142 - accuracy: 1.0000 - val_loss: 1.5058 - val_accuracy: 0.4800 Epoch 238/300 5/5 
[==============================] - 0s 10ms/step - loss: 0.1674 - accuracy: 0.9192 - val_loss: 1.4954 - val_accuracy: 0.5200 Epoch 239/300 5/5 [==============================] - 0s 10ms/step - loss: 0.1246 - accuracy: 0.9596 - val_loss: 1.4776 - val_accuracy: 0.5200 Epoch 240/300 5/5 [==============================] - 0s 11ms/step - loss: 0.0915 - accuracy: 0.9899 - val_loss: 1.4703 - val_accuracy: 0.5200 Epoch 241/300 5/5 [==============================] - 0s 11ms/step - loss: 0.1080 - accuracy: 0.9697 - val_loss: 1.4913 - val_accuracy: 0.5200 Epoch 242/300 5/5 [==============================] - 0s 10ms/step - loss: 0.1976 - accuracy: 0.9798 - val_loss: 1.5185 - val_accuracy: 0.5200 Epoch 243/300 5/5 [==============================] - 0s 11ms/step - loss: 0.1357 - accuracy: 0.9596 - val_loss: 1.5415 - val_accuracy: 0.5200 Epoch 244/300 5/5 [==============================] - 0s 11ms/step - loss: 0.2367 - accuracy: 0.9495 - val_loss: 1.6021 - val_accuracy: 0.5600 Epoch 245/300 5/5 [==============================] - 0s 11ms/step - loss: 0.1714 - accuracy: 0.9192 - val_loss: 1.6069 - val_accuracy: 0.5600 Epoch 246/300 5/5 [==============================] - 0s 11ms/step - loss: 0.1384 - accuracy: 0.9394 - val_loss: 1.5733 - val_accuracy: 0.5600 Epoch 247/300 5/5 [==============================] - 0s 11ms/step - loss: 0.1623 - accuracy: 0.9697 - val_loss: 1.5718 - val_accuracy: 0.5200 Epoch 248/300 5/5 [==============================] - 0s 10ms/step - loss: 0.0526 - accuracy: 0.9899 - val_loss: 1.5915 - val_accuracy: 0.4800 Epoch 249/300 5/5 [==============================] - 0s 10ms/step - loss: 0.2460 - accuracy: 0.9495 - val_loss: 1.6528 - val_accuracy: 0.4800 Epoch 250/300 5/5 [==============================] - 0s 11ms/step - loss: 0.0818 - accuracy: 0.9798 - val_loss: 1.7130 - val_accuracy: 0.4800 Epoch 251/300 5/5 [==============================] - 0s 10ms/step - loss: 0.1450 - accuracy: 0.9697 - val_loss: 1.7354 - val_accuracy: 0.4800 Epoch 252/300 5/5 
[==============================] - 0s 10ms/step - loss: 0.0954 - accuracy: 0.9798 - val_loss: 1.7183 - val_accuracy: 0.4800 Epoch 253/300 5/5 [==============================] - 0s 10ms/step - loss: 0.1407 - accuracy: 0.9495 - val_loss: 1.6969 - val_accuracy: 0.4800 Epoch 254/300 5/5 [==============================] - 0s 11ms/step - loss: 0.0943 - accuracy: 0.9899 - val_loss: 1.6876 - val_accuracy: 0.4800 Epoch 255/300 5/5 [==============================] - 0s 11ms/step - loss: 0.0840 - accuracy: 0.9798 - val_loss: 1.6636 - val_accuracy: 0.4800 Epoch 256/300 5/5 [==============================] - 0s 12ms/step - loss: 0.1189 - accuracy: 0.9697 - val_loss: 1.6280 - val_accuracy: 0.4800 Epoch 257/300 5/5 [==============================] - 0s 12ms/step - loss: 0.1279 - accuracy: 0.9798 - val_loss: 1.6123 - val_accuracy: 0.4800 Epoch 258/300 5/5 [==============================] - 0s 11ms/step - loss: 0.1504 - accuracy: 0.9596 - val_loss: 1.6306 - val_accuracy: 0.4800 Epoch 259/300 5/5 [==============================] - 0s 10ms/step - loss: 0.1448 - accuracy: 0.9596 - val_loss: 1.6611 - val_accuracy: 0.4800 Epoch 260/300 5/5 [==============================] - 0s 10ms/step - loss: 0.1440 - accuracy: 0.9697 - val_loss: 1.6805 - val_accuracy: 0.4800 Epoch 261/300 5/5 [==============================] - 0s 11ms/step - loss: 0.1078 - accuracy: 0.9798 - val_loss: 1.7369 - val_accuracy: 0.4800 Epoch 262/300 5/5 [==============================] - 0s 10ms/step - loss: 0.1182 - accuracy: 0.9596 - val_loss: 1.7528 - val_accuracy: 0.4800 Epoch 263/300 5/5 [==============================] - 0s 10ms/step - loss: 0.0829 - accuracy: 0.9899 - val_loss: 1.7370 - val_accuracy: 0.4800 Epoch 264/300 5/5 [==============================] - 0s 11ms/step - loss: 0.0961 - accuracy: 0.9596 - val_loss: 1.7634 - val_accuracy: 0.4800 Epoch 265/300 5/5 [==============================] - 0s 10ms/step - loss: 0.0706 - accuracy: 1.0000 - val_loss: 1.7950 - val_accuracy: 0.4800 Epoch 266/300 5/5 
[==============================] - 0s 10ms/step - loss: 0.1538 - accuracy: 0.9091 - val_loss: 1.8190 - val_accuracy: 0.4800 Epoch 267/300 5/5 [==============================] - 0s 10ms/step - loss: 0.1197 - accuracy: 0.9697 - val_loss: 1.8417 - val_accuracy: 0.4800 Epoch 268/300 5/5 [==============================] - 0s 11ms/step - loss: 0.1511 - accuracy: 0.9596 - val_loss: 1.8149 - val_accuracy: 0.4800 Epoch 269/300 5/5 [==============================] - 0s 11ms/step - loss: 0.0665 - accuracy: 0.9899 - val_loss: 1.7783 - val_accuracy: 0.4800 Epoch 270/300 5/5 [==============================] - 0s 10ms/step - loss: 0.1102 - accuracy: 0.9697 - val_loss: 1.7778 - val_accuracy: 0.4800 Epoch 271/300 5/5 [==============================] - 0s 10ms/step - loss: 0.0443 - accuracy: 1.0000 - val_loss: 1.7662 - val_accuracy: 0.4800 Epoch 272/300 5/5 [==============================] - 0s 9ms/step - loss: 0.1157 - accuracy: 0.9697 - val_loss: 1.7285 - val_accuracy: 0.5200 Epoch 273/300 5/5 [==============================] - 0s 10ms/step - loss: 0.0833 - accuracy: 0.9798 - val_loss: 1.5992 - val_accuracy: 0.5200 Epoch 274/300 5/5 [==============================] - 0s 11ms/step - loss: 0.0763 - accuracy: 1.0000 - val_loss: 1.4706 - val_accuracy: 0.5200 Epoch 275/300 5/5 [==============================] - 0s 10ms/step - loss: 0.2336 - accuracy: 0.9091 - val_loss: 1.3813 - val_accuracy: 0.5200 Epoch 276/300 5/5 [==============================] - 0s 11ms/step - loss: 0.1367 - accuracy: 0.9697 - val_loss: 1.3966 - val_accuracy: 0.4800 Epoch 277/300 5/5 [==============================] - 0s 11ms/step - loss: 0.1156 - accuracy: 0.9798 - val_loss: 1.4031 - val_accuracy: 0.5200 Epoch 278/300 5/5 [==============================] - 0s 9ms/step - loss: 0.0500 - accuracy: 0.9899 - val_loss: 1.4059 - val_accuracy: 0.5200 Epoch 279/300 5/5 [==============================] - 0s 10ms/step - loss: 0.0539 - accuracy: 1.0000 - val_loss: 1.4113 - val_accuracy: 0.4800 Epoch 280/300 5/5 
[==============================] - 0s 12ms/step - loss: 0.1042 - accuracy: 0.9798 - val_loss: 1.4522 - val_accuracy: 0.5200 Epoch 281/300 5/5 [==============================] - 0s 13ms/step - loss: 0.1352 - accuracy: 0.9495 - val_loss: 1.5379 - val_accuracy: 0.5200 Epoch 282/300 5/5 [==============================] - 0s 11ms/step - loss: 0.0573 - accuracy: 0.9899 - val_loss: 1.6033 - val_accuracy: 0.5200 Epoch 283/300 5/5 [==============================] - 0s 11ms/step - loss: 0.0787 - accuracy: 0.9798 - val_loss: 1.6581 - val_accuracy: 0.5200 Epoch 284/300 5/5 [==============================] - 0s 11ms/step - loss: 0.0795 - accuracy: 0.9899 - val_loss: 1.6786 - val_accuracy: 0.5200 Epoch 285/300 5/5 [==============================] - 0s 10ms/step - loss: 0.0714 - accuracy: 0.9798 - val_loss: 1.6966 - val_accuracy: 0.5200 Epoch 286/300 5/5 [==============================] - 0s 11ms/step - loss: 0.1454 - accuracy: 0.9394 - val_loss: 1.7467 - val_accuracy: 0.5200 Epoch 287/300 5/5 [==============================] - 0s 33ms/step - loss: 0.0836 - accuracy: 0.9798 - val_loss: 1.8209 - val_accuracy: 0.5200 Epoch 288/300 5/5 [==============================] - 0s 11ms/step - loss: 0.0656 - accuracy: 0.9899 - val_loss: 1.8699 - val_accuracy: 0.4800 Epoch 289/300 5/5 [==============================] - 0s 12ms/step - loss: 0.2126 - accuracy: 0.9293 - val_loss: 1.9250 - val_accuracy: 0.4800 Epoch 290/300 5/5 [==============================] - 0s 12ms/step - loss: 0.0797 - accuracy: 0.9798 - val_loss: 1.9840 - val_accuracy: 0.4800 Epoch 291/300 5/5 [==============================] - 0s 12ms/step - loss: 0.0938 - accuracy: 0.9899 - val_loss: 2.0202 - val_accuracy: 0.4800 Epoch 292/300 5/5 [==============================] - 0s 11ms/step - loss: 0.1023 - accuracy: 0.9495 - val_loss: 1.9804 - val_accuracy: 0.4800 Epoch 293/300 5/5 [==============================] - 0s 10ms/step - loss: 0.1322 - accuracy: 0.9596 - val_loss: 1.9602 - val_accuracy: 0.4800 Epoch 294/300 5/5 
[==============================] - 0s 11ms/step - loss: 0.1004 - accuracy: 0.9697 - val_loss: 1.9563 - val_accuracy: 0.5200
Epoch 295/300
5/5 [==============================] - 0s 10ms/step - loss: 0.0808 - accuracy: 0.9798 - val_loss: 1.9499 - val_accuracy: 0.5200
Epoch 296/300
5/5 [==============================] - 0s 11ms/step - loss: 0.1155 - accuracy: 0.9495 - val_loss: 1.9419 - val_accuracy: 0.5200
Epoch 297/300
5/5 [==============================] - 0s 11ms/step - loss: 0.0987 - accuracy: 0.9596 - val_loss: 1.9685 - val_accuracy: 0.5200
Epoch 298/300
5/5 [==============================] - 0s 10ms/step - loss: 0.1021 - accuracy: 0.9697 - val_loss: 1.9752 - val_accuracy: 0.4800
Epoch 299/300
5/5 [==============================] - 0s 10ms/step - loss: 0.1520 - accuracy: 0.9495 - val_loss: 1.9830 - val_accuracy: 0.4800
Epoch 300/300
5/5 [==============================] - 0s 10ms/step - loss: 0.1005 - accuracy: 0.9798 - val_loss: 2.0018 - val_accuracy: 0.4800

CPU times: user 20.7 s, sys: 3.82 s, total: 24.5 s
Wall time: 17.3 s

That's it! Now let's quickly explore how well the model learned by plotting its accuracy on the training and validation sets across epochs:

import plotly.graph_objects as go

epoch = np.arange(300) + 1

fig = go.Figure()

# Add traces

fig.add_trace(go.Scatter(x=epoch, y=fit.history['accuracy'],
                         mode='lines+markers',
                         name='training set'))

fig.add_trace(go.Scatter(x=epoch, y=fit.history['val_accuracy'],
                         mode='lines+markers',
                         name='validation set'))

fig.update_layout(title="Accuracy in training and validation set",
                  template='plotly_white')

fig.update_xaxes(title_text='Epoch')

fig.update_yaxes(title_text='Accuracy')

fig.show()

#plot(fig, filename = 'acc_eyes.html')
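
Besides the plot, it can help to boil the training history down to a few numbers. The following is a minimal sketch, assuming that `fit` is the object returned by `model.fit` and that `fit.history` is a dictionary with the keys `accuracy`, `val_accuracy` and `val_loss`, as in the log above. The helper `summarize_history` is hypothetical (it is not part of Keras) and the toy values are made up for illustration only; they are not results from this notebook.

```python
import numpy as np


def summarize_history(history):
    """Summarize a Keras-style history dict (hypothetical helper, not part of Keras)."""
    train_acc = np.asarray(history['accuracy'], dtype=float)
    val_acc = np.asarray(history['val_accuracy'], dtype=float)
    val_loss = np.asarray(history['val_loss'], dtype=float)

    # Epochs are reported 1-based to match the training log.
    best_epoch = int(np.argmin(val_loss)) + 1

    return {
        'best_val_loss_epoch': best_epoch,
        'best_val_loss': float(val_loss.min()),
        'final_train_accuracy': float(train_acc[-1]),
        'final_val_accuracy': float(val_acc[-1]),
        # A large positive gap hints that the model memorized the training set.
        'final_accuracy_gap': float(train_acc[-1] - val_acc[-1]),
    }


# Toy values for illustration only, NOT taken from the run above.
toy_history = {
    'accuracy': [0.50, 0.70, 0.90, 0.98],
    'val_accuracy': [0.48, 0.56, 0.52, 0.48],
    'val_loss': [1.40, 1.25, 1.60, 2.00],
}

summary = summarize_history(toy_history)
print(summary)
```

In the notebook itself, the call would be `summarize_history(fit.history)`. A training accuracy close to 1 combined with a validation accuracy that stays around chance and a rising validation loss is the typical signature of overfitting.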