Models, AI and all other buzz words¶

Peer Herholz (he/him)

Postdoctoral researcher - NeuroDataScience lab at MNI/McGill, UNIQUE

Member - BIDS, ReproNim, Brainhack, Neuromod, OHBM SEA-SIG

@peerherholz

@peerherholz

A quick poll…¶

Before we start, let’s do a quick poll that will help to start discussions and get the point of this lecture and workshop across. We will do this in a live and interactive manner via Mentimeter. Thus, please head over to www.mentimeter.com and use the following codes at the top of the page to get to the polls. Important: all answers will be anonymous, so don’t worry.

Question 1¶

Please use the code 62447080.

from IPython.display import IFrame

IFrame(src='https://www.mentimeter.com/s/3e455379770da0beb369c8ddf412aa08/33e28848548e', width=700, height=400)

Question 2¶

Please use the code 2893975.

from IPython.display import IFrame

IFrame(src='https://www.mentimeter.com/s/feb3310ac9a14b5ac89854b3e9bed51b/fac70d5f626b', width=700, height=400)

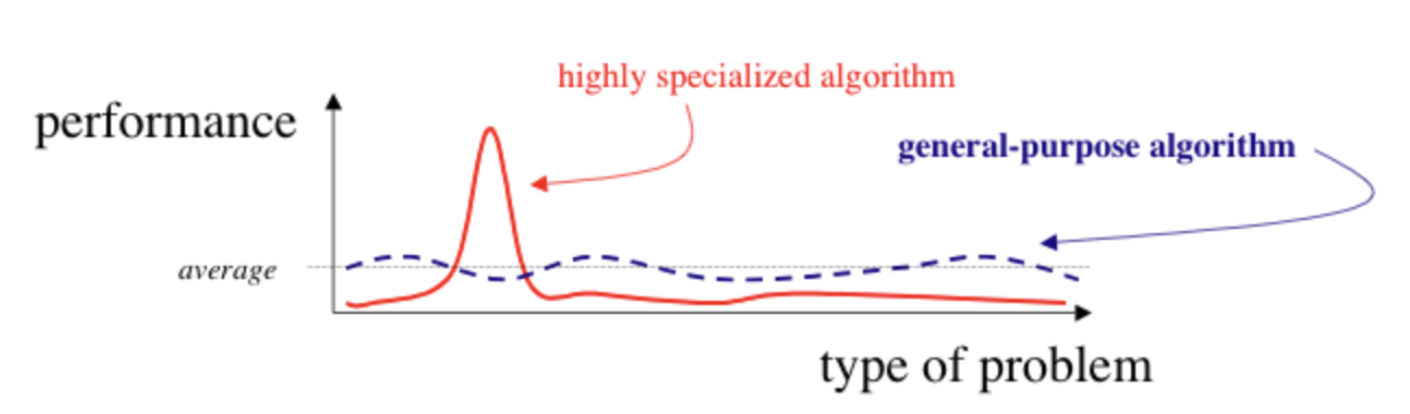

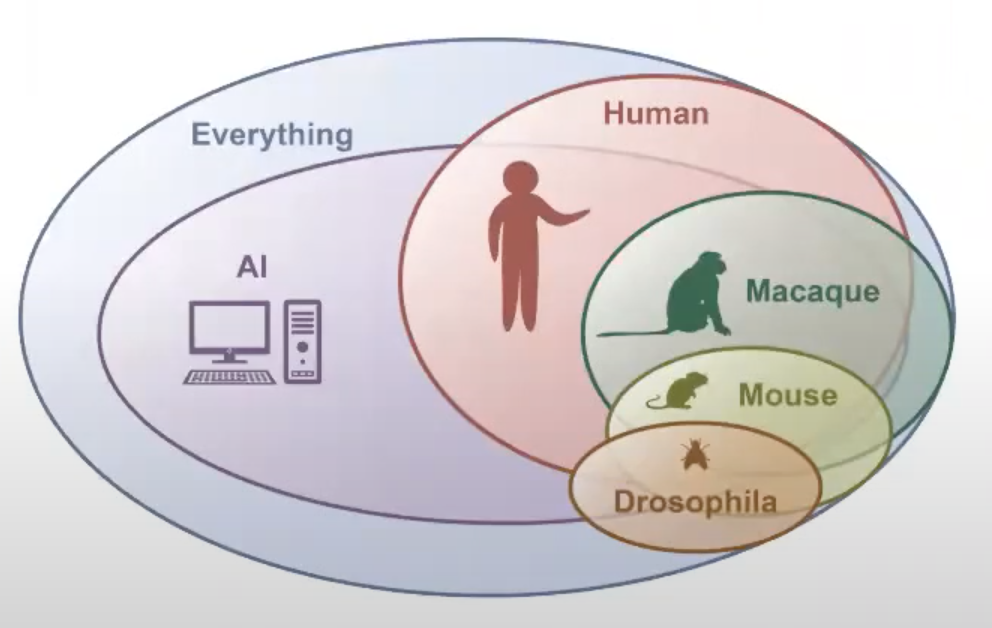

Quite often, the discussion about AI centers around general artificial intelligence, which might be a bit of an ill-posed problem, as we don’t have any reason to believe that “true” general artificial intelligence can exist. The focus here is on "general".

https://cdn-images-1.medium.com/fit/t/1600/480/1*rdVotAISnffB6aTzeiETHQ.png

However, one thing we know that appears to be fairly general-purpose is a biological brain. On the other hand, it really is not: it might be applicable to many domains, but it is heavily focused on a few tasks within the world that surrounds it. This is also the idea behind what we should aim for with AI, referred to as the AI set.

The AI set

A set of tasks animals are good at, and perhaps some they are not good at due to physical limitations. These are the functions AI should learn and be capable of accomplishing.

this is exactly why we’re here…

Aim(s) of this section¶

get to know the “lingo” and basic vocabulary

define important terms

situate core workflow aspects

Outline for this section¶

The definitions

The fellowship of core aspects

The two (or more) parts of each aspect

The return of the complexity

Yes, it’s that “easy”

The definitions¶

Artificial intelligence (AI) is intelligence demonstrated by machines, as opposed to the natural intelligence displayed by humans or animals. Leading AI textbooks define the field as the study of “intelligent agents”: any system that perceives its environment and takes actions that maximize its chance of achieving its goals. Some popular accounts use the term “artificial intelligence” to describe machines that mimic “cognitive” functions that humans associate with the human mind, such as “learning” and “problem solving”, however this definition is rejected by major AI researchers.

https://en.wikipedia.org/wiki/Artificial_intelligence

Machine learning (ML) is the study of computer algorithms that can improve automatically through experience and by the use of data. It is seen as a part of artificial intelligence. Machine learning algorithms build a model based on sample data, known as “training data”, in order to make predictions or decisions without being explicitly programmed to do so. A subset of machine learning is closely related to computational statistics, which focuses on making predictions using computers; but not all machine learning is statistical learning. The study of mathematical optimization delivers methods, theory and application domains to the field of machine learning. Data mining is a related field of study, focusing on exploratory data analysis through unsupervised learning. Some implementations of machine learning use data and neural networks in a way that mimics the working of a biological brain.

https://en.wikipedia.org/wiki/Machine_learning

Deep learning (also known as deep structured learning) is part of a broader family of machine learning methods based on artificial neural networks with representation learning. Learning can be supervised, semi-supervised or unsupervised. Artificial neural networks (ANNs) were inspired by information processing and distributed communication nodes in biological systems. ANNs have various differences from biological brains. Specifically, neural networks tend to be static and symbolic, while the biological brain of most living organisms is dynamic (plastic) and analogue. The adjective “deep” in deep learning refers to the use of multiple layers in the network. Early work showed that a linear perceptron cannot be a universal classifier, but that a network with a nonpolynomial activation function with one hidden layer of unbounded width can.

The fellowship of core aspects¶

Term |

Definition |

|---|---|

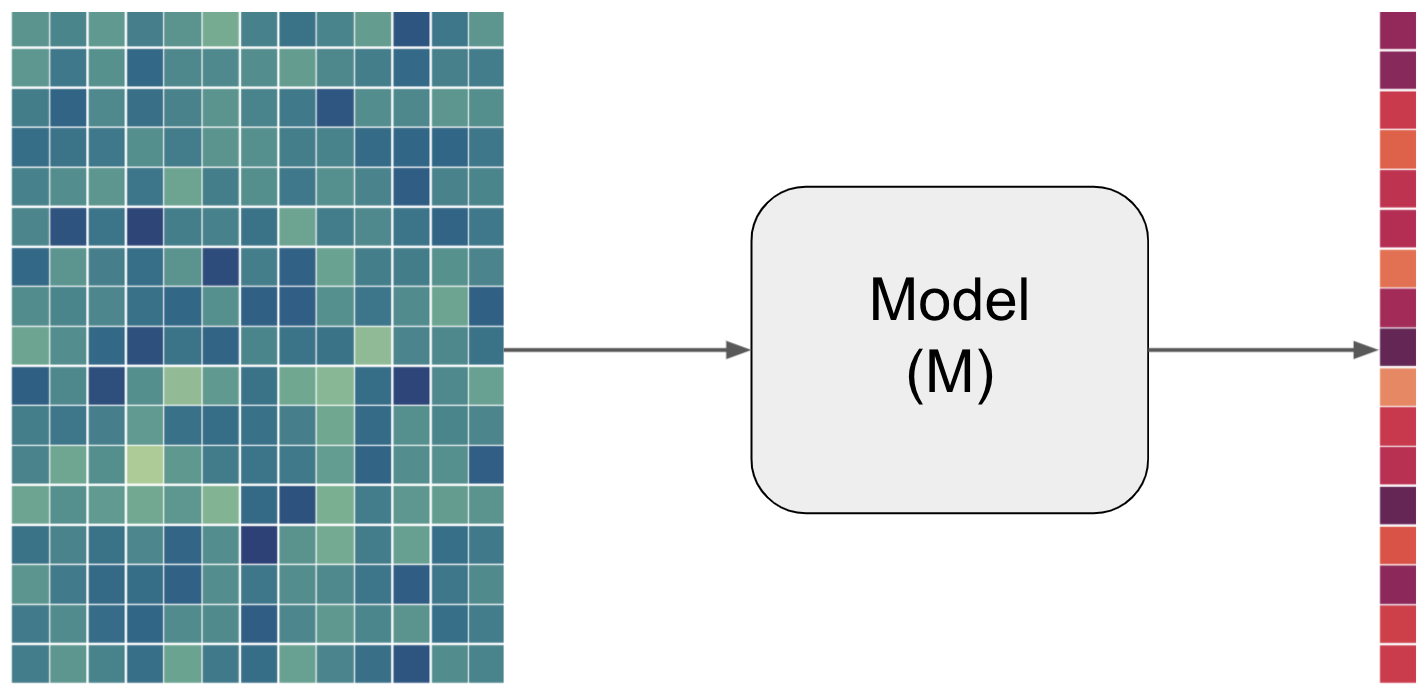

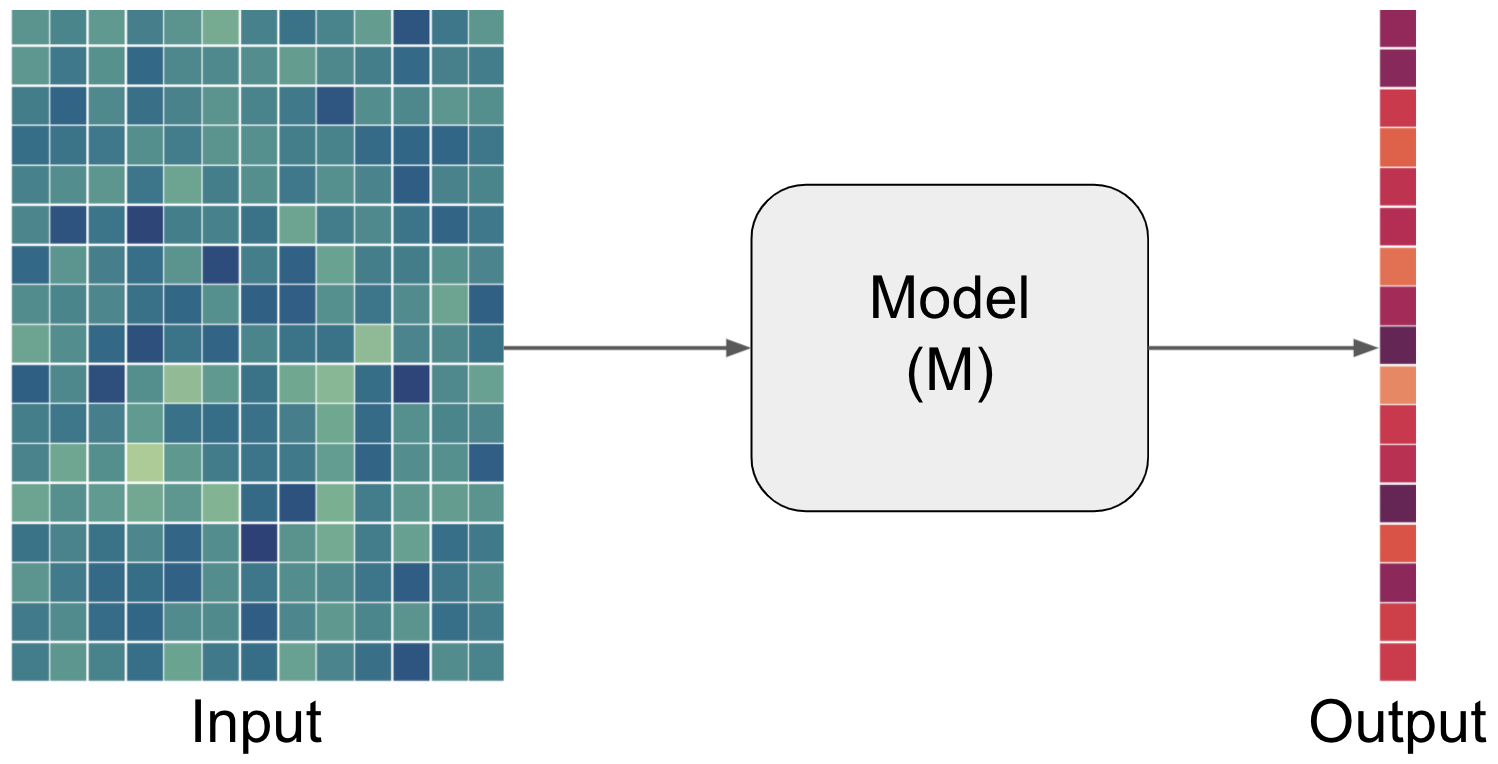

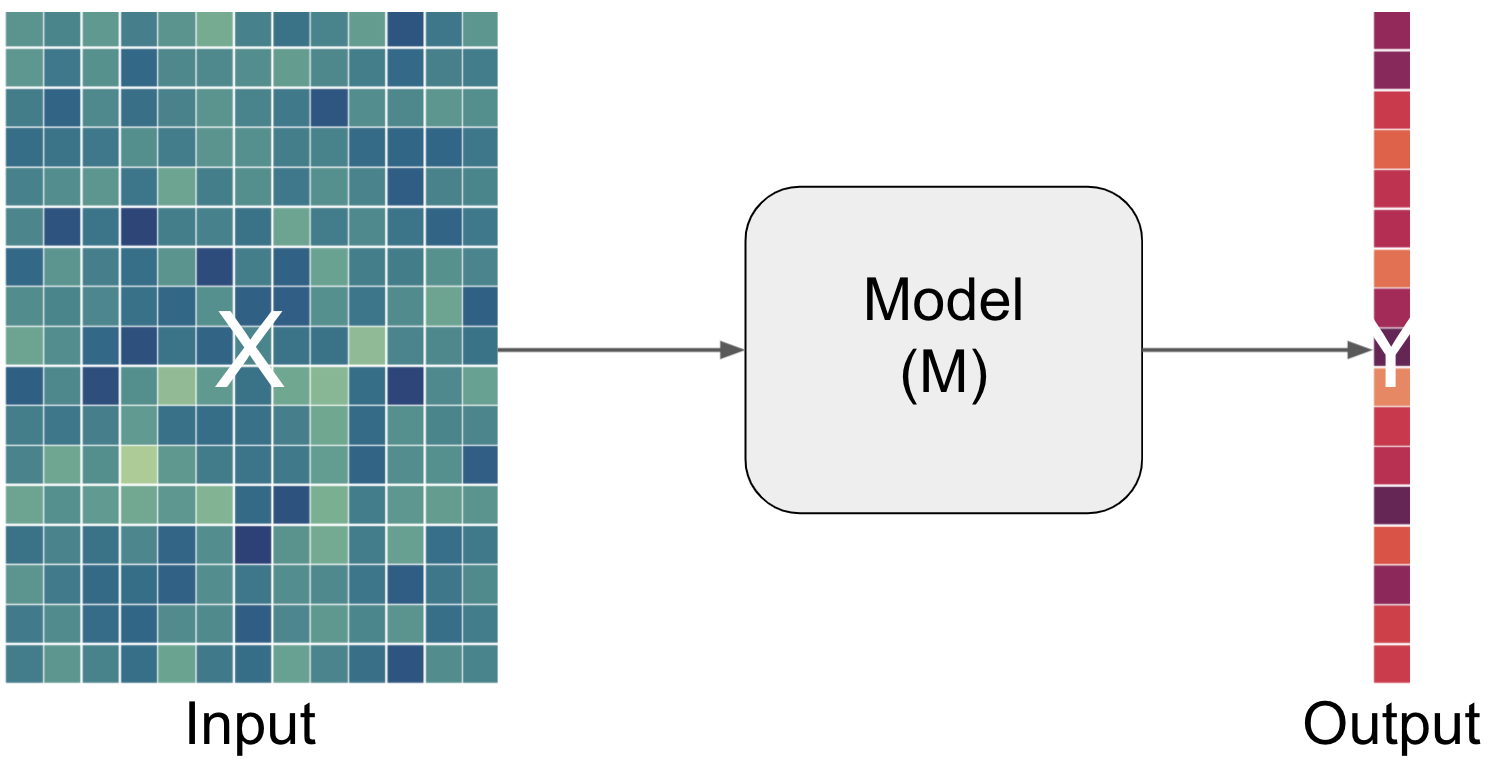

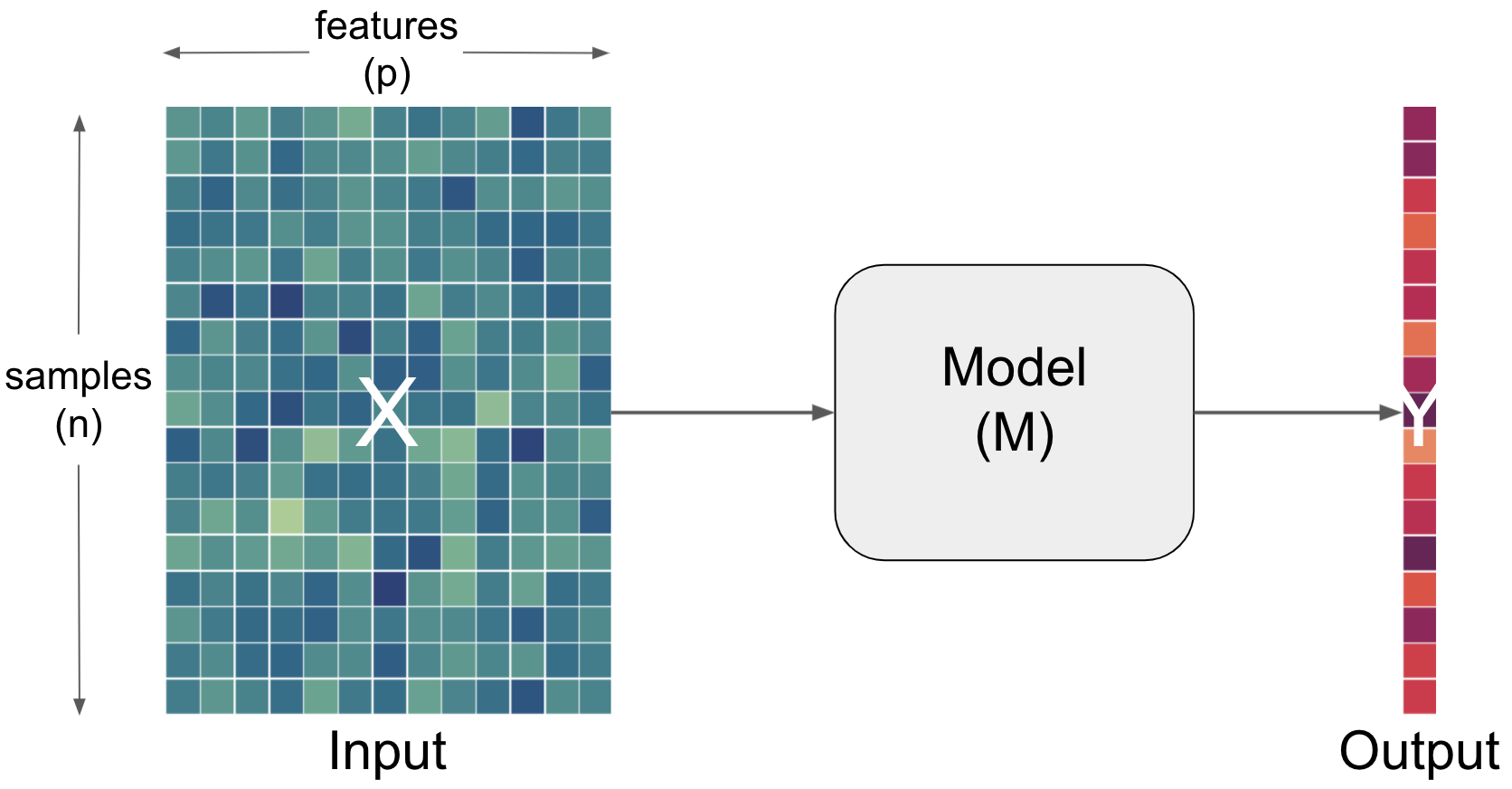

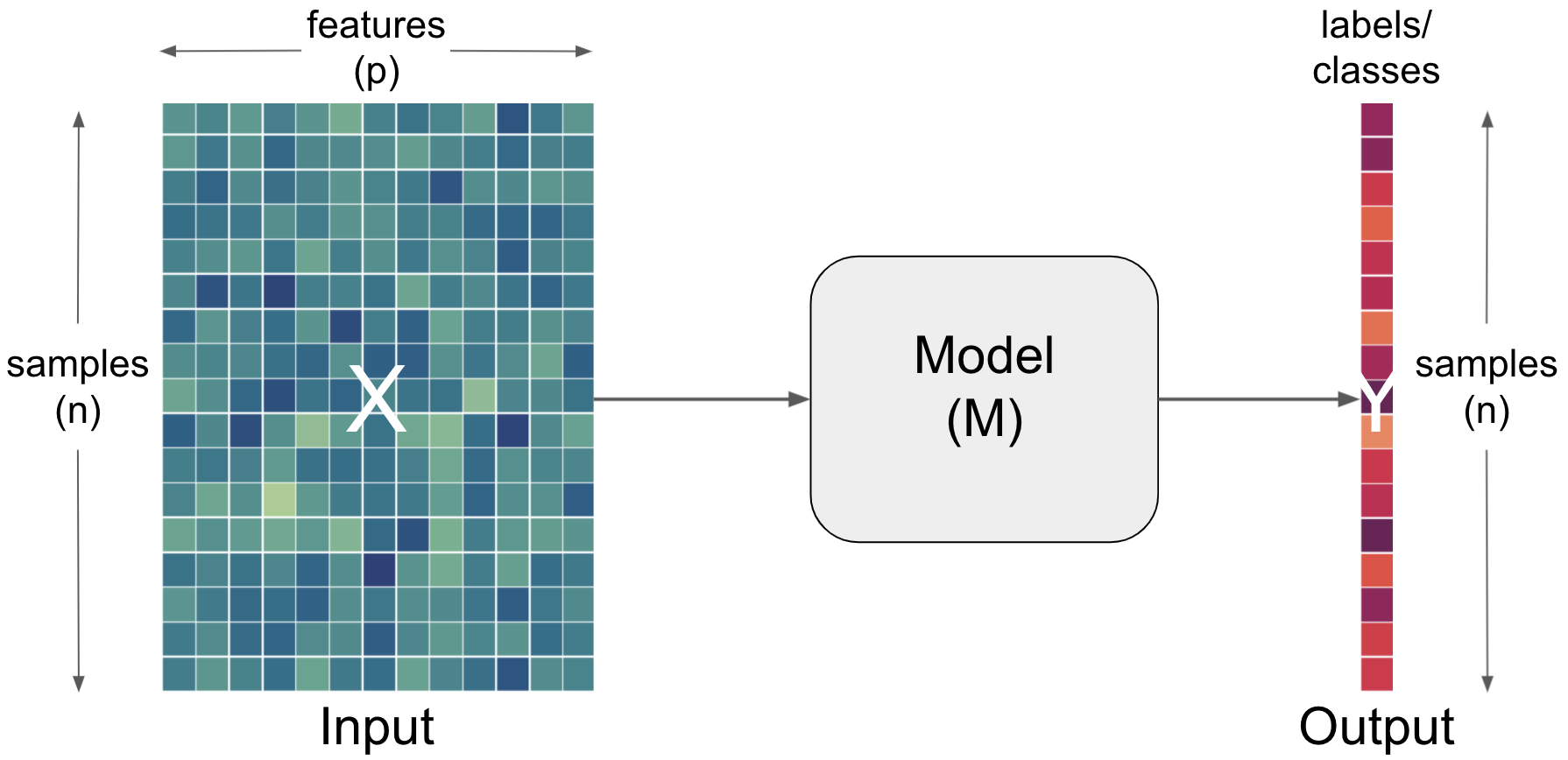

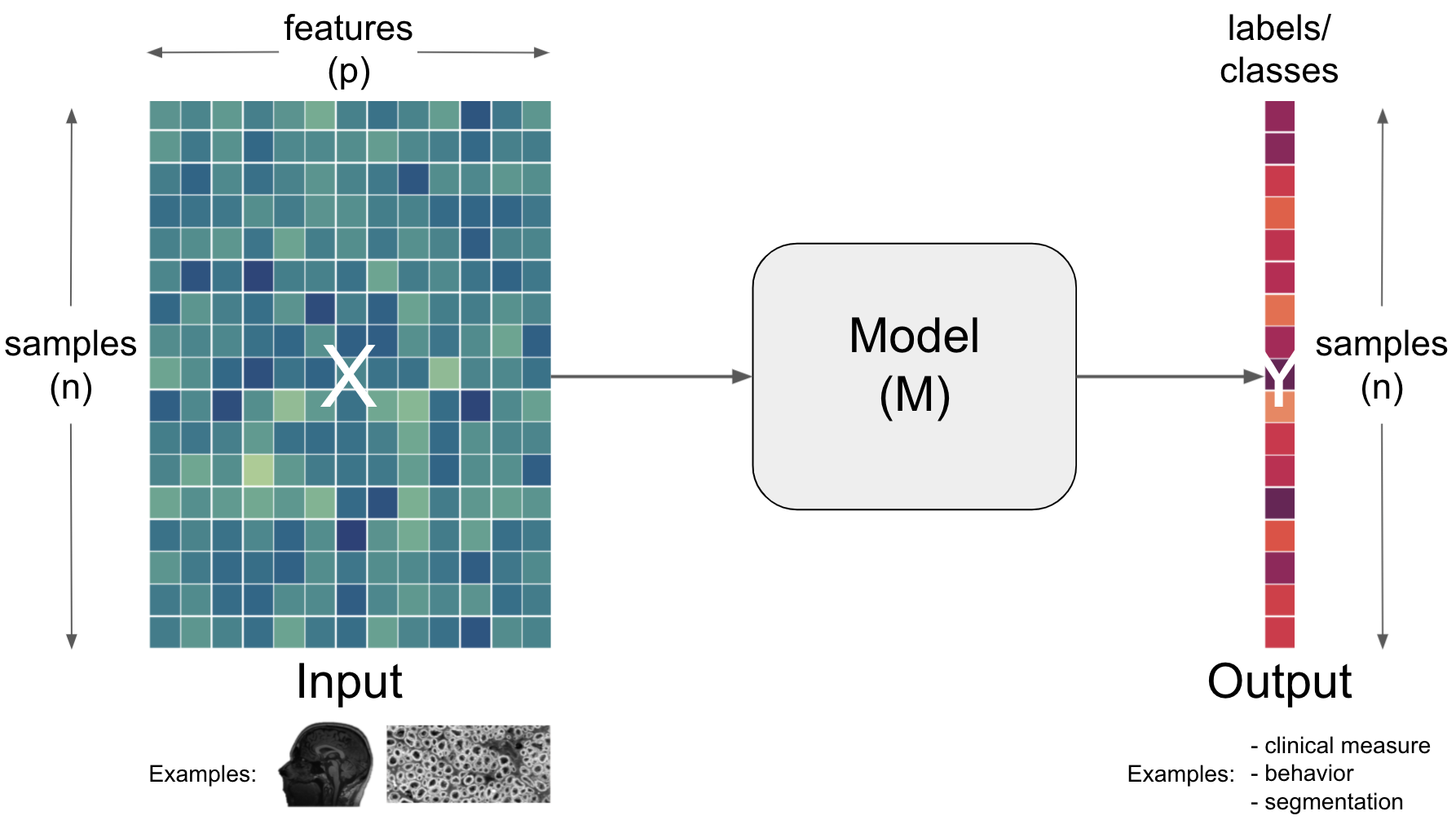

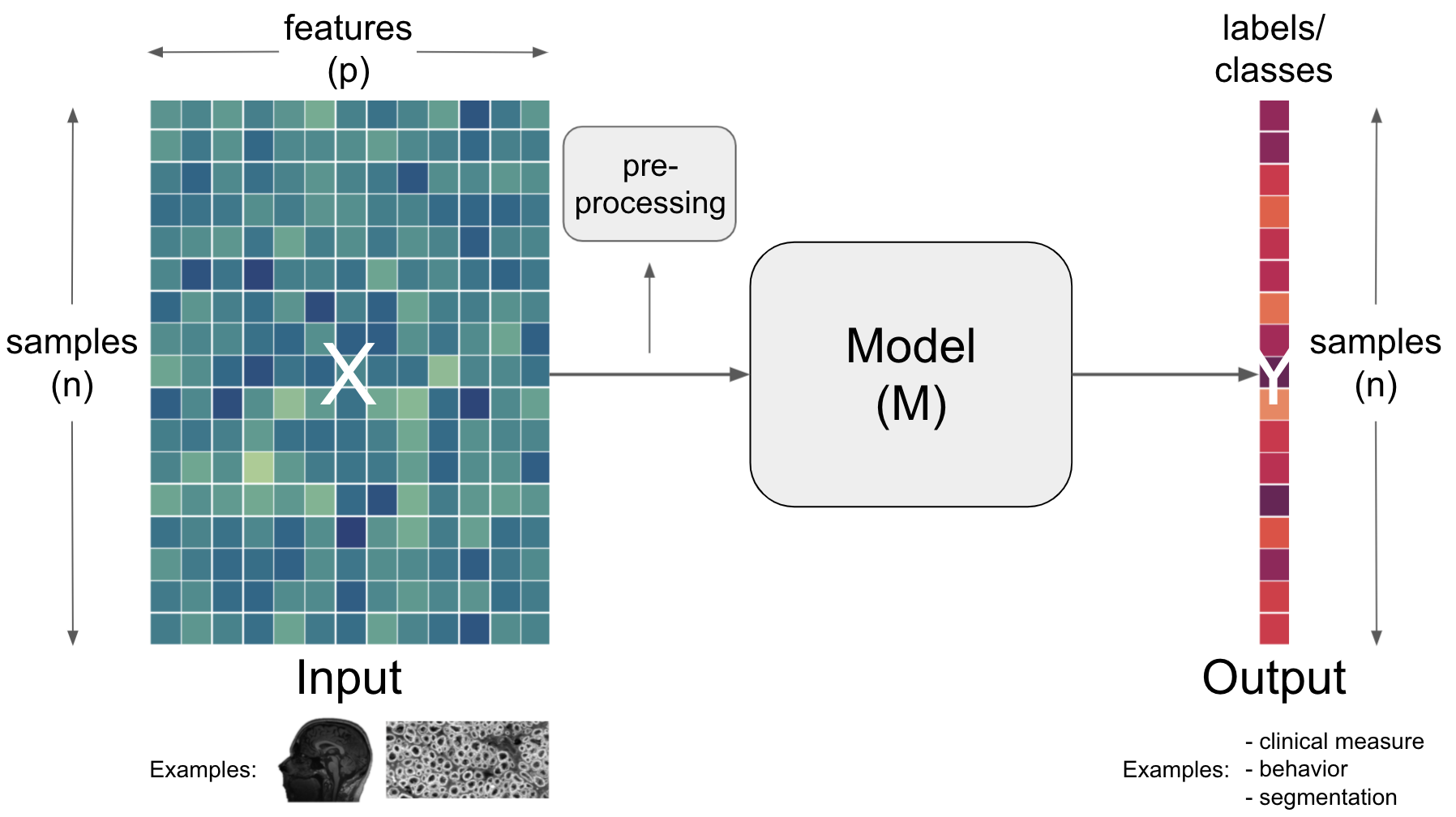

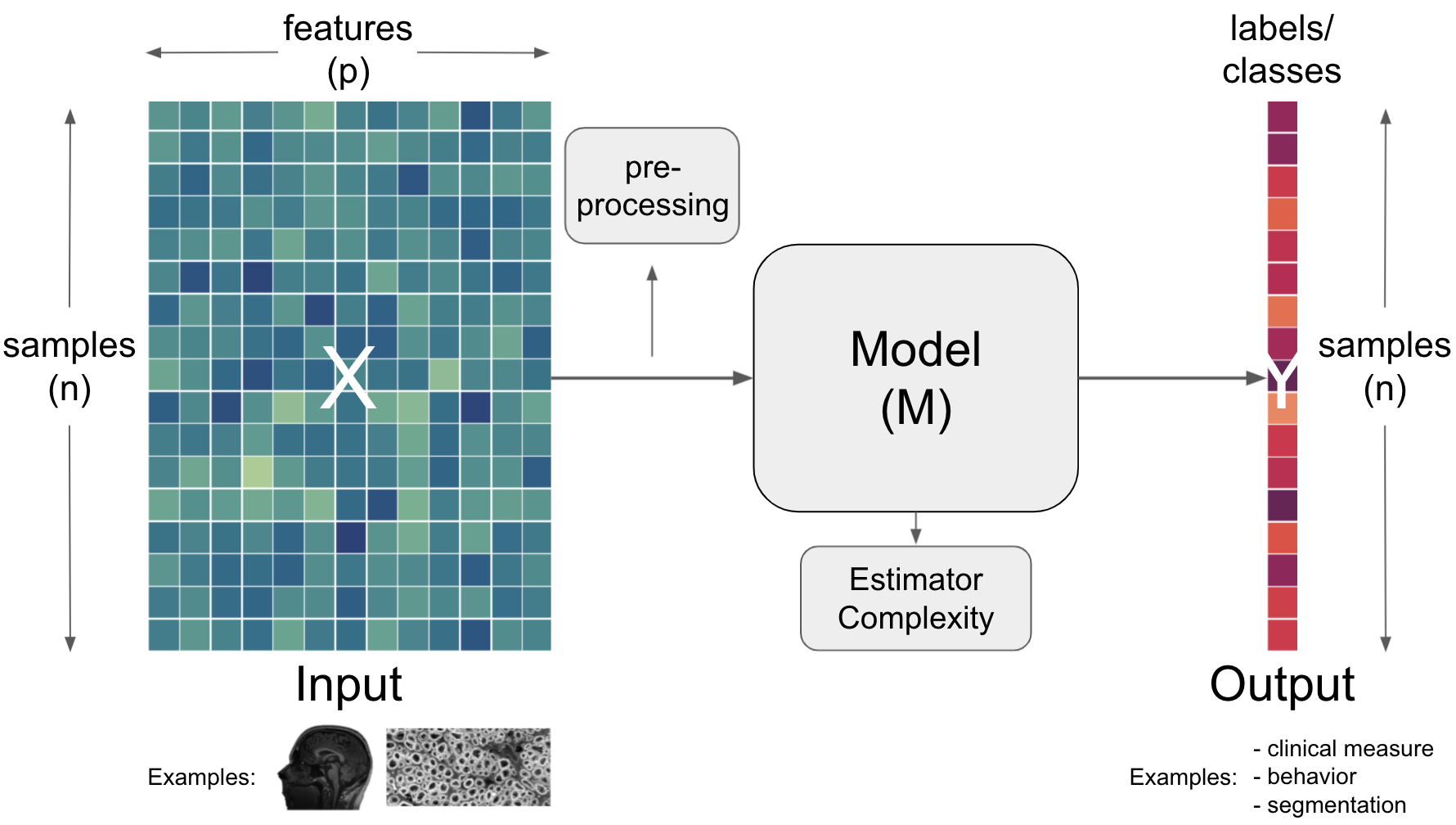

Model |

A set of parameters that makes a prediction based on a given input. The parameter values are fitted to available data. |

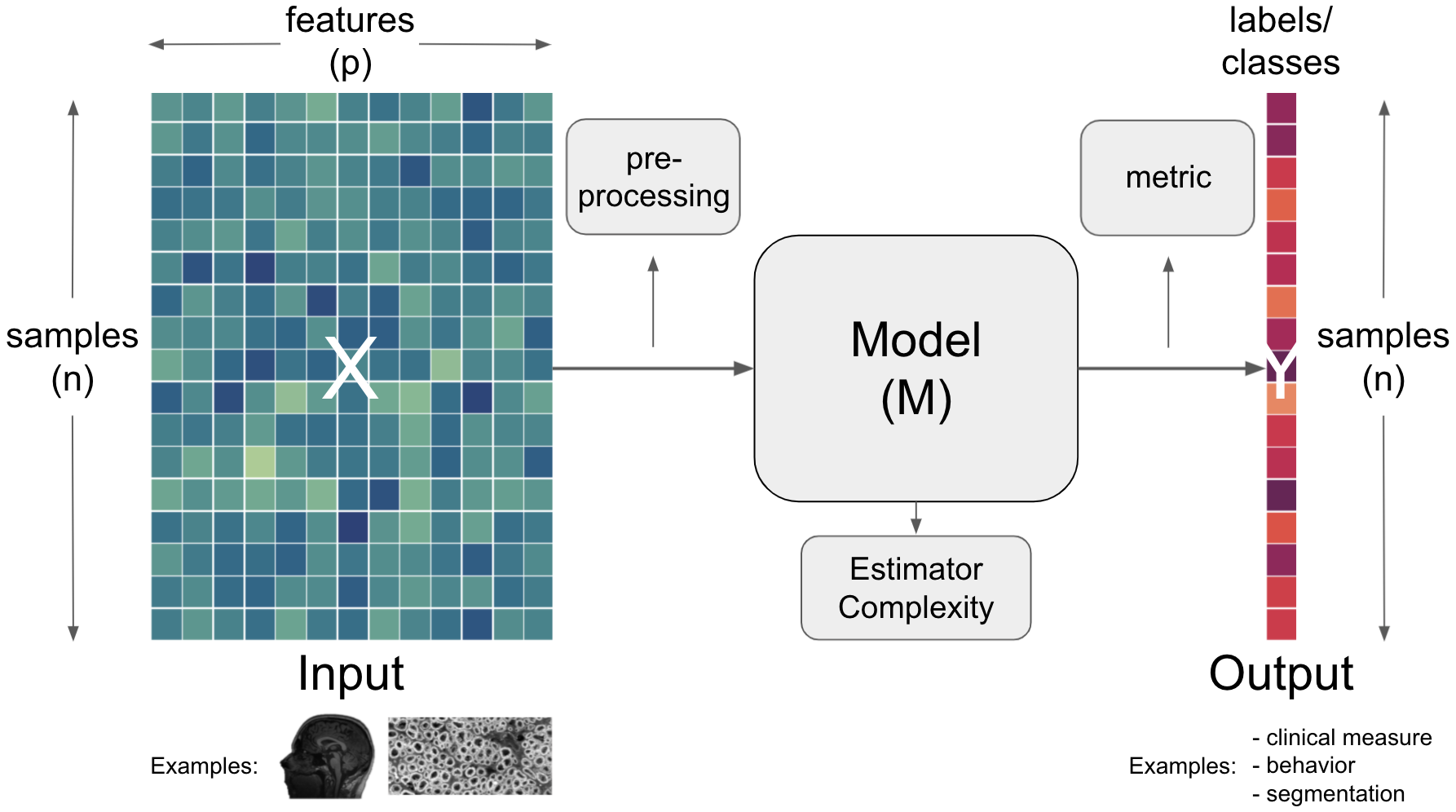

The two (or more) parts of each aspect¶

| Term | Definition |
|---|---|
| Input | Data from which we would like to make predictions. For example, results from lab tests (e.g. hematocrit, protein concentrations, response time) to inform a diagnosis (e.g. anemia, Alzheimer’s, Multiple Sclerosis). Data is typically multidimensional, with each sample having multiple values that we think might inform our predictions. Conventionally, the dimensions of data are [number of subjects] x [number of features]. |
| Feature | One dimension of the input data which corresponds to a particular measure. When describing a person, ‘height’, ‘weight’, ‘hair colour’ could be considered as features. |
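To make the [number of subjects] x [number of features] convention concrete, here is a minimal sketch using NumPy. The array `X` and all its values are made up for illustration; only the layout (rows are subjects, columns are features) follows the definitions above.

```python
import numpy as np

# Hypothetical toy input: 4 subjects (rows) x 3 features (columns).
# Columns: height in cm, weight in kg, response time in s (made-up values).
X = np.array([
    [170.0, 65.0, 0.42],
    [182.0, 80.0, 0.38],
    [158.0, 52.0, 0.51],
    [175.0, 72.0, 0.45],
])

n_subjects, n_features = X.shape

# One feature = one column, i.e. the same measure across all subjects.
height = X[:, 0]

# One sample = one row, i.e. all measures of a single subject.
first_subject = X[0, :]

print(n_subjects, n_features)
```

Keeping subjects on the first axis is also the layout that sklearn estimators expect for their input.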

| Term | Definition |
|---|---|
| Labels | True values corresponding to a data sample that we would like to predict accurately. Also known as the ‘target’. |
| Prediction | The output of a model for a given sample. In the ideal case, the prediction should be the sample’s label. |

The return of the complexity¶

| Term | Definition |
|---|---|
| Preprocessing | Change the distribution of the input (raw feature vectors) to make it more suitable for models, e.g. via standardization, scaling or transforming. |
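As one example of preprocessing, standardization rescales every feature to zero mean and unit variance. The sketch below does this by hand with NumPy on made-up values; the array `X` and the variable names are assumptions for illustration only.

```python
import numpy as np

# Hypothetical input: 4 subjects x 2 features on very different scales
# (made-up values: height in cm, weight in kg).
X = np.array([
    [170.0, 65.0],
    [182.0, 80.0],
    [158.0, 52.0],
    [175.0, 72.0],
])

# Standardize each feature (column) separately:
# subtract its mean and divide by its standard deviation.
feature_mean = X.mean(axis=0)
feature_std = X.std(axis=0)
X_scaled = (X - feature_mean) / feature_std

# After standardization every column has mean 0 and standard deviation 1.
print(X_scaled.mean(axis=0).round(6), X_scaled.std(axis=0).round(6))
```

In a real analysis the mean and standard deviation should be estimated on the training data only and then applied to the test data, which is what a scaler inside an sklearn pipeline takes care of.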

| Term | Definition |
|---|---|
| Estimator | An instance of a model in |
| Complexity | Refers to the number of parameters in a model. A model with 10 parameters is said to be more complex than a model with 3. |

| Term | Definition |
|---|---|
| Metric | A function that defines how good a prediction is. |
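A metric can be as simple as the fraction of predictions that match the labels, i.e. the accuracy. The following is a minimal sketch; the function name `accuracy` and the example label values are made up for illustration.

```python
import numpy as np


def accuracy(y_true, y_pred):
    """Fraction of samples whose prediction equals the label."""
    y_true = np.asarray(y_true)
    y_pred = np.asarray(y_pred)
    return float(np.mean(y_true == y_pred))


# Hypothetical labels and model predictions for 4 samples.
labels = ['5yo', 'adult', '5yo', '8yo']
predictions = ['5yo', 'adult', '8yo', '8yo']

score = accuracy(labels, predictions)
print(score)  # 3 of 4 predictions are correct -> 0.75
```

Which metric is appropriate depends on the task: accuracy fits classification, while a regression problem would rather use something like the mean squared error.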

Sounds like a lot, eh? Let’s see what these things look like in action…

Yes, it’s that “easy”¶

Let’s imagine we want to use the functional connectivity between brain regions to predict the age of human participants. Using Python and a few of its fantastic packages, here pandas and sklearn, we can make this analysis happen in no time.

At first we get our data:

import urllib.request

url = 'https://www.dropbox.com/s/u3vp2ghrsmkw5po/MAIN2019_BASC064_subsamp_features.npz?dl=1'

urllib.request.urlretrieve(url, 'MAIN2019_BASC064_subsamp_features.npz')

('MAIN2019_BASC064_subsamp_features.npz',

<http.client.HTTPMessage at 0x7f87f1e97730>)

Next, we load our input and inspect it:

import numpy as np

data = np.load('MAIN2019_BASC064_subsamp_features.npz')['a']

data.shape

(155, 2016)

We can also use plotly to easily visualize our input data in an interactive manner:

import plotly.express as px

from IPython.display import display, HTML

from plotly.offline import init_notebook_mode, plot

fig = px.imshow(data, labels=dict(x="features", y="participants"), height=800, aspect='auto')

fig.update(layout_coloraxis_showscale=False)

init_notebook_mode(connected=True)

#fig.show()

plot(fig, filename = 'input_data.html')

display(HTML('input_data.html'))

Besides the input data, we also need our labels:

url = 'https://www.dropbox.com/s/ofsqdcukyde4lke/participants.csv?dl=1'

urllib.request.urlretrieve(url, 'participants.csv')

('participants.csv', <http.client.HTTPMessage at 0x7f87f3762af0>)

Which we can easily load and check via pandas:

import pandas as pd

labels = pd.read_csv('participants.csv')['AgeGroup']

labels.describe()

count 155

unique 6

top 5yo

freq 34

Name: AgeGroup, dtype: object

For a better intuition, we’re going to also visualize the labels and their distribution:

fig = px.histogram(labels, marginal='box', template='plotly_white')

fig.update_layout(showlegend=False, width=800, height=600)

init_notebook_mode(connected=True)

#fig.show()

plot(fig, filename = 'labels.html')

display(HTML('labels.html'))

And we’re ready to create our machine learning analysis pipeline using sklearn, in which we will scale our input data, train a Support Vector Machine and test its predictive performance. We import the required functions and classes:

from sklearn.preprocessing import StandardScaler

from sklearn.svm import SVC

from sklearn.pipeline import make_pipeline

from sklearn.model_selection import train_test_split

from sklearn.metrics import accuracy_score

and set up a sklearn pipeline:

pipe = make_pipeline(

StandardScaler(),

SVC()

)

After dividing our input and labels into training and test sets:

X_train, X_test, y_train, y_test = train_test_split(data, labels, random_state=0)

we can already fit our machine learning analysis pipeline to our data:

pipe.fit(X_train, y_train)

Pipeline(steps=[('standardscaler', StandardScaler()), ('svc', SVC())])

and test its predictive performance:

print('accuracy is %s with chance level being %s'

      % (accuracy_score(y_test, pipe.predict(X_test)), 1/len(pd.unique(labels))))

accuracy is 0.46153846153846156 with chance level being 0.16666666666666666

but wait…there’s much more to talk about here

You lied!

I’m sorry.

The truth is: while it’s very easy (maybe too easy?) to set up and run machine learning analyses, doing it right is definitely not (as with a lot of other complex analyses)… There are so many aspects to think about and so many things one can vary that the garden of forking paths becomes tremendously large. Thus, we will spend the next few hours going through central concepts and components of these analyses, first for “classic” machine learning and then for deep learning.

One aspect that is part of every model is the fitting, i.e. the learning of model weights (machine learning much?). Thus, we need to go through some things there as well…

Model fitting¶

When talking about model fitting, we need to talk about three central aspects:

the model

the loss function

the optimization

| Term | Definition |
|---|---|
| Model | A set of parameters that makes a prediction based on a given input. The parameter values are fitted to available data. |
| Loss function | A function that evaluates how well your algorithm models your dataset. |
| Optimization | A function that tries to minimize the loss via updating model parameters. |

An example: linear regression¶

Model: $y=\beta_{0}+\beta_{1} x_{1}^{2}+\beta_{2} x_{2}^{2}$

Loss function: $MSE=\frac{1}{n} \sum_{i=1}^{n}\left(y_{i}-\hat{y}_{i}\right)^{2}$

Optimization: Gradient descent
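The model and loss function above can be written down in a few lines. This is a minimal sketch with made-up data: the function names `predict` and `mse` and all values are assumptions for illustration. Note that the model is still a linear regression, because it is linear in the parameters β even though the inputs are squared.

```python
import numpy as np


def predict(beta, x1, x2):
    """Model: y = beta0 + beta1 * x1^2 + beta2 * x2^2."""
    beta0, beta1, beta2 = beta
    return beta0 + beta1 * x1 ** 2 + beta2 * x2 ** 2


def mse(y, y_hat):
    """Loss function: mean squared error between labels and predictions."""
    return float(np.mean((y - y_hat) ** 2))


# Made-up data generated with beta = (1, 2, 2) and no noise.
x1 = np.array([0.0, 1.0, 2.0])
x2 = np.array([1.0, 0.0, 1.0])
y = np.array([3.0, 3.0, 11.0])

# The loss is zero for the generating parameters and larger for a poor guess.
loss_true = mse(y, predict((1.0, 2.0, 2.0), x1, x2))
loss_zero = mse(y, predict((0.0, 0.0, 0.0), x1, x2))
print(loss_true, loss_zero)
```

The job of the optimization is then to move from a poor parameter guess towards parameters with a lower loss.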

Gradient descent with a single input variable and n samples

Start with random weights ($\beta_{0}$ and $\beta_{1}$): $\hat{y}_{i}=\beta_{0}+\beta_{1} X_{i}$

Compute loss (i.e. MSE): $MSE=\frac{1}{n} \sum_{i=1}^{n}\left(y_{i}-\hat{y}_{i}\right)^{2}$

Update weights based on the gradient
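The three steps can be sketched in plain NumPy. Everything specific here is an assumption for illustration: the simulated data (true intercept 2 and slope 3), the learning rate and the number of steps. The gradients follow from differentiating the MSE with respect to β0 and β1.

```python
import numpy as np

rng = np.random.default_rng(0)

# Made-up data: y = 2 + 3 * X plus a little noise, n = 100 samples.
n = 100
X = rng.uniform(-1, 1, size=n)
y = 2.0 + 3.0 * X + rng.normal(scale=0.1, size=n)

# Step 1: start with random weights.
beta0, beta1 = rng.normal(size=2)

learning_rate = 0.1  # assumed step size
n_steps = 2000       # assumed number of updates

for step in range(n_steps):
    # Prediction of the current model.
    y_hat = beta0 + beta1 * X
    error = y_hat - y

    # Step 2: compute the loss (MSE).
    mse = np.mean(error ** 2)

    # Step 3: update the weights based on the gradient of the MSE:
    # dMSE/dbeta0 = 2/n * sum(y_hat - y)
    # dMSE/dbeta1 = 2/n * sum((y_hat - y) * X)
    grad0 = 2 * np.mean(error)
    grad1 = 2 * np.mean(error * X)
    beta0 -= learning_rate * grad0
    beta1 -= learning_rate * grad1

print(beta0, beta1, mse)
```

For this convex problem the estimates should end up close to the closed-form least-squares solution, which makes it a convenient sanity check for the update rule.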

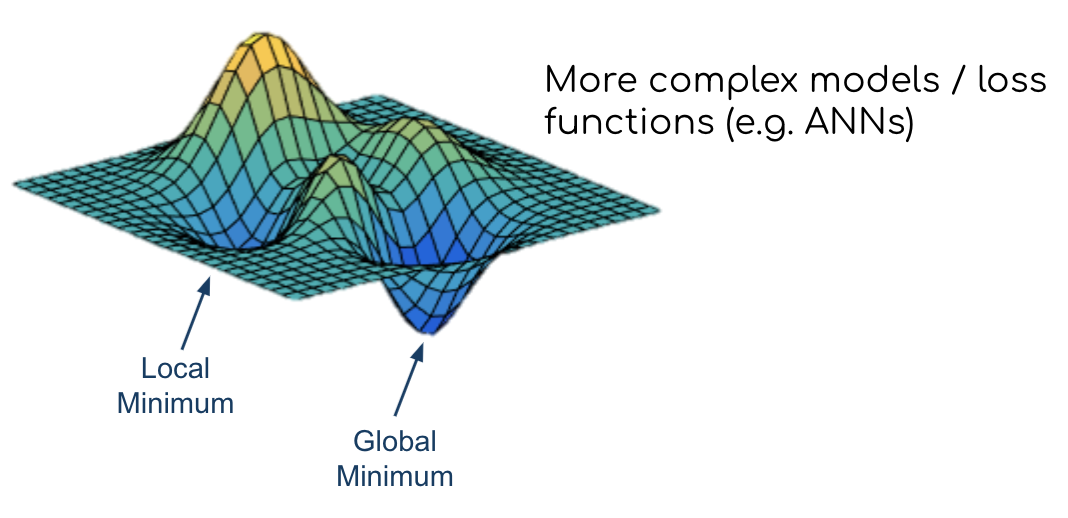

Gradient descent for complex models with non-convex loss functions

Start with random weights ($\beta_{0}$ and $\beta_{1}$): $\hat{y}_{i}=\beta_{0}+\beta_{1} X_{i}$

Compute loss (i.e. MSE): $MSE=\frac{1}{n} \sum_{i=1}^{n}\left(y_{i}-\hat{y}_{i}\right)^{2}$

Update weights based on the gradient
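With a non-convex loss, the same procedure can end up in different minima depending on where it starts. The sketch below uses a made-up one-dimensional loss with two minima as a stand-in for the loss surface of a complex model. The function, the two starting points and the learning rate are all assumptions for illustration.

```python
def loss(w):
    """Toy non-convex loss with a deeper and a shallower minimum."""
    return w ** 4 - 3 * w ** 2 + w


def gradient(w):
    """Derivative of the toy loss with respect to w."""
    return 4 * w ** 3 - 6 * w + 1


def gradient_descent(w_start, learning_rate=0.01, n_steps=1000):
    """Repeatedly step against the gradient, starting from w_start."""
    w = w_start
    for _ in range(n_steps):
        w -= learning_rate * gradient(w)
    return w


# Two different starting weights, standing in for two random initializations.
w_left = gradient_descent(-2.0)
w_right = gradient_descent(2.0)

# Both runs converge, but to different minima with different loss values.
print(w_left, loss(w_left))
print(w_right, loss(w_right))
```

The run started on the right gets stuck in the shallower minimum, which is why the initialization and the learning rate matter much more for complex models than for the convex linear regression case.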

Questions¶